Azure Data Factory

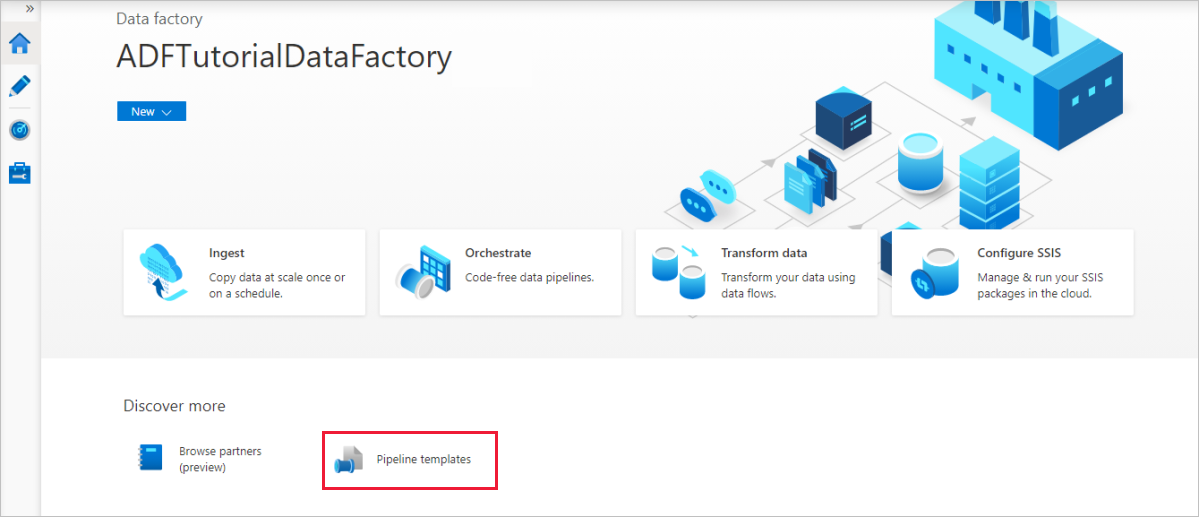

Azure Data Factory is a cloud-based ETL and data integration service that lets us create data-driven pipelines for orchestrating data movement and transforming data at scale. It lets us combine data from multiple sources, reshape it into analytical models, and save those models for subsequent querying, visualization, and reporting.
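
Data Factory pipelines are authored as JSON. The sketch below builds a minimal pipeline definition with a single Copy activity as a Python dictionary and prints it. The pipeline, activity, and dataset names are hypothetical, and the `BlobSource`/`BlobSink` types assume a copy between two Azure Blob Storage datasets; other stores use other source and sink types.

```python
import json


def copy_pipeline(name: str, input_dataset: str, output_dataset: str) -> dict:
    """Return a minimal Data Factory pipeline definition with one Copy activity.

    A sketch only: all names are hypothetical, and BlobSource/BlobSink
    assume both datasets live in Azure Blob Storage.
    """
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyFromBlobToBlob",
                    "type": "Copy",
                    # Datasets are referenced by name rather than embedded.
                    "inputs": [{"referenceName": input_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": output_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "BlobSource"},
                        "sink": {"type": "BlobSink"},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    pipeline = copy_pipeline("CopyBlobPipeline", "InputDataset", "OutputDataset")
    print(json.dumps(pipeline, indent=2))
```

The script only produces the definition; the Data Factory service, not this code, would run the copy once such a pipeline is deployed.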

Data Factory is also available in Microsoft Fabric, an all-in-one analytics solution for enterprises that covers everything from data movement to data science, real-time analytics, business intelligence, and reporting.

In the world of big data, raw, unorganized data is often stored in relational, non-relational, and other storage systems. However, on its own, raw data doesn't have the proper context or meaning to provide meaningful insights to analysts, data scientists, or business decision makers. Big data requires a service that can orchestrate and operationalize processes to refine these enormous stores of raw data into actionable business insights. Azure Data Factory is a managed cloud service built for these complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects.

For example, imagine a gaming company that collects petabytes of game logs produced by games in the cloud. The company wants to analyze these logs to gain insights into customer preferences, demographics, and usage behavior. It also wants to identify up-sell and cross-sell opportunities, develop compelling new features, drive business growth, and provide a better experience to its customers.

Azure Data Factory has become an essential tool in cloud computing. For more specific details, refer to the official Microsoft documentation or consult Azure experts.

The availability of so much data is one of the greatest gifts of our day. But is it possible to enrich data generated in the cloud by using reference data from on-premises or other disparate data sources? Microsoft Azure answers this question with Azure Data Factory: a platform for creating workflows that ingest data from both on-premises and cloud data stores, transform or process that data by using existing compute services such as Hadoop, and then publish the results to an on-premises or cloud data store for business intelligence (BI) applications to consume. In short, Azure Data Factory is a cloud-based data integration service for creating data-driven workflows that orchestrate and automate data movement and data transformation. ADF does not store any data itself.
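
The ingest, transform, and publish workflow described above maps onto a chain of activities linked by `dependsOn`. The sketch below expresses such a chain as Python dictionaries that mirror Data Factory's pipeline JSON, then uses the standard library's `graphlib` (Python 3.9+) to check the order in which the activities would run. All entity names, the Hive script path, and the `FileSystemSource`, `BlobSource`, `BlobSink`, and `SqlDWSink` types are illustrative assumptions; `run_order` is a local helper, not a Data Factory API.

```python
from graphlib import TopologicalSorter


def ref(name: str, kind: str) -> dict:
    """Reference another Data Factory entity (dataset or linked service) by name."""
    return {"referenceName": name, "type": kind}


def after(activity: str) -> list:
    """Dependency entry: run only after the named activity succeeds."""
    return [{"activity": activity, "dependencyConditions": ["Succeeded"]}]


# Hypothetical names throughout; source/sink types are assumptions for illustration.
activities = [
    {   # 1. Ingest: copy raw logs from an on-premises file share into Blob Storage.
        "name": "IngestRawLogs",
        "type": "Copy",
        "inputs": [ref("OnPremLogsDataset", "DatasetReference")],
        "outputs": [ref("RawLogsBlobDataset", "DatasetReference")],
        "typeProperties": {"source": {"type": "FileSystemSource"}, "sink": {"type": "BlobSink"}},
    },
    {   # 2. Transform: run a Hive script on an HDInsight (Hadoop) cluster.
        "name": "PartitionWithHive",
        "type": "HDInsightHive",
        "dependsOn": after("IngestRawLogs"),
        "linkedServiceName": ref("HDInsightLinkedService", "LinkedServiceReference"),
        "typeProperties": {
            "scriptPath": "scripts/partition_logs.hql",
            "scriptLinkedService": ref("AzureStorageLinkedService", "LinkedServiceReference"),
        },
    },
    {   # 3. Publish: load the results into a cloud data warehouse for BI tools.
        "name": "PublishToWarehouse",
        "type": "Copy",
        "dependsOn": after("PartitionWithHive"),
        "inputs": [ref("PartitionedLogsDataset", "DatasetReference")],
        "outputs": [ref("WarehouseTableDataset", "DatasetReference")],
        "typeProperties": {"source": {"type": "BlobSource"}, "sink": {"type": "SqlDWSink"}},
    },
]

pipeline = {"name": "IngestTransformPublish", "properties": {"activities": activities}}


def run_order(acts: list) -> list:
    """Order activities so each one follows those it depends on (local check only)."""
    graph = {a["name"]: {d["activity"] for d in a.get("dependsOn", [])} for a in acts}
    return list(TopologicalSorter(graph).static_order())


print(run_order(activities))
```

For this linear chain the printed order is ingest, then the Hive step, then publish; the same helper would also reveal a cycle, since `graphlib` raises `CycleError` when dependencies loop back on themselves.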

The configuration pattern in this tutorial applies to mapping data flows in general and can be extended when you transform data with them. The file transformed in this tutorial is MoviesDB. To retrieve it from GitHub, copy its contents into a text editor of your choice and save it locally as a.

Give each pipeline a name that represents the action it performs. An activity might require you to specify the linked service that links to the required compute environment. Linked services also represent data stores, including but not limited to a SQL Server database, an Oracle database, a file share, or an Azure Blob Storage account. For example, an Azure Storage linked service specifies a connection string to connect to the Azure Storage account. Transformation activities can also run on external compute, as the Databricks Jar activity does.

Data Factory can connect to on-premises data sources through a self-hosted integration runtime (formerly called the Data Management Gateway), which transfers data securely between on-premises and cloud environments. Pipeline runs are typically instantiated by passing arguments to the parameters defined in the pipeline. Once runs are under way, you can monitor them: Azure Monitor lets you track pipeline performance and success rates, set up alerts for failures or delays, and view detailed logs.
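
A linked service and a parameterized pipeline can be sketched the same way. Below, a hypothetical Azure Storage linked service holds a placeholder connection string, and a hypothetical pipeline declares two parameters, one with a default value. `bind_run_arguments` is a toy model, not a Data Factory API, of how a run's arguments fill those parameters; rejecting unknown argument names is an assumption of this sketch.

```python
import json

# Hypothetical linked service; the connection string is a placeholder, not a real secret.
storage_linked_service = {
    "name": "AzureStorageLinkedService",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "connectionString": "DefaultEndpointsProtocol=https;AccountName=<account>;AccountKey=<key>"
        },
    },
}

# Parameters are declared on the pipeline and referenced in expressions
# such as "@pipeline().parameters.inputFolder".
pipeline = {
    "name": "CopyFolderPipeline",
    "properties": {
        "parameters": {
            "inputFolder": {"type": "String"},
            "outputFolder": {"type": "String", "defaultValue": "output"},
        },
        "activities": [],  # activities omitted to keep the sketch short
    },
}


def bind_run_arguments(pipeline_def: dict, args: dict) -> dict:
    """Toy model: each declared parameter takes the run argument, else its default."""
    declared = pipeline_def["properties"].get("parameters", {})
    unknown = set(args) - set(declared)
    if unknown:  # assumption of this sketch: unknown names are an error
        raise ValueError(f"unknown parameters: {sorted(unknown)}")
    bound = {}
    for name, spec in declared.items():
        if name in args:
            bound[name] = args[name]
        elif "defaultValue" in spec:
            bound[name] = spec["defaultValue"]
        else:
            raise ValueError(f"missing required parameter: {name}")
    return bound


print(json.dumps(bind_run_arguments(pipeline, {"inputFolder": "raw/2024"})))
```

Keeping the connection details in the linked service and the run-specific values in parameters lets one pipeline definition serve many runs against the same store.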

The first step in building an information production system is to connect to all the required sources of data and processing, such as software-as-a-service (SaaS) services, databases, file shares, and FTP web services. Data Factory provides connectors for a wide range of stores, for example SAP Table and Couchbase; some connectors, including Couchbase, are in preview. If you want to take a dependency on preview connectors in your solution, contact Azure support.

A pipeline is a logical grouping of activities that together perform a task. For example, a pipeline can contain a group of activities that ingests data from an Azure blob and then runs a Hive query on an HDInsight cluster to partition the data. For a complete walkthrough of creating a pipeline, see Quickstart: create a Data Factory. In the gaming example, to extract insights the company hopes to process the joined data by using a Spark cluster in the cloud (Azure HDInsight) and publish the transformed data into a cloud data warehouse such as Azure Synapse Analytics, so that it can easily build a report on top of it.

Pipelines are defined in JSON, with the activities, parameters, and other settings nested under a top-level properties object. Control-flow activities determine how those activities run. Activity dependency defines how subsequent activities depend on previous ones, determining whether execution continues to the next task. The ForEach activity iterates over a collection and executes the specified activities in a loop. The If Condition activity provides the same functionality as an if statement in programming languages: it evaluates an expression and runs one set of activities when the expression is true and another when it is false. In Azure Synapse Analytics, the pipeline editor lists all activities that can be used within a pipeline.
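
The control-flow activities above can be combined. This hypothetical fragment wraps a ForEach activity in an If Condition activity, so the loop runs only when a `files` array parameter is non-empty, and adds a follow-up activity whose dependency condition is `Completed`. The activity names, the `files` parameter, and the `Wait` placeholder steps are illustrative assumptions. `should_run` is a simplified local model of how dependency conditions gate the next activity, not the service's implementation.

```python
# Hypothetical fragment: names and the "files" parameter are illustrative.
process_files = {
    "name": "ProcessEachFile",
    "type": "ForEach",
    "typeProperties": {
        "isSequential": True,
        # ForEach iterates over the array this expression evaluates to.
        "items": {"value": "@pipeline().parameters.files", "type": "Expression"},
        # Placeholder inner step; real work would reference the current @item().
        "activities": [
            {"name": "PlaceholderStep", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 1}}
        ],
    },
}

check_for_files = {
    "name": "CheckForFiles",
    "type": "IfCondition",
    "typeProperties": {
        "expression": {"value": "@greater(length(pipeline().parameters.files), 0)", "type": "Expression"},
        "ifTrueActivities": [process_files],
        "ifFalseActivities": [],
    },
}

after_check = {
    "name": "AfterCheck",
    "type": "Wait",
    "typeProperties": {"waitTimeInSeconds": 1},
    # Run whether CheckForFiles succeeded or failed.
    "dependsOn": [{"activity": "CheckForFiles", "dependencyConditions": ["Completed"]}],
}


def should_run(activity: dict, statuses: dict) -> bool:
    """Toy model: every upstream activity's status must match one of its conditions.

    "Completed" is treated as matching either "Succeeded" or "Failed".
    """
    for dep in activity.get("dependsOn", []):
        allowed = set(dep["dependencyConditions"])
        if "Completed" in allowed:
            allowed |= {"Succeeded", "Failed"}
        if statuses.get(dep["activity"]) not in allowed:
            return False
    return True


print(should_run(after_check, {"CheckForFiles": "Failed"}))   # True
print(should_run(after_check, {"CheckForFiles": "Skipped"}))  # False
```

In this model, conditions listed for one dependency are alternatives, while separate entries in `dependsOn` must all be satisfied, which is how a follow-up step can wait on several upstream activities at once.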
