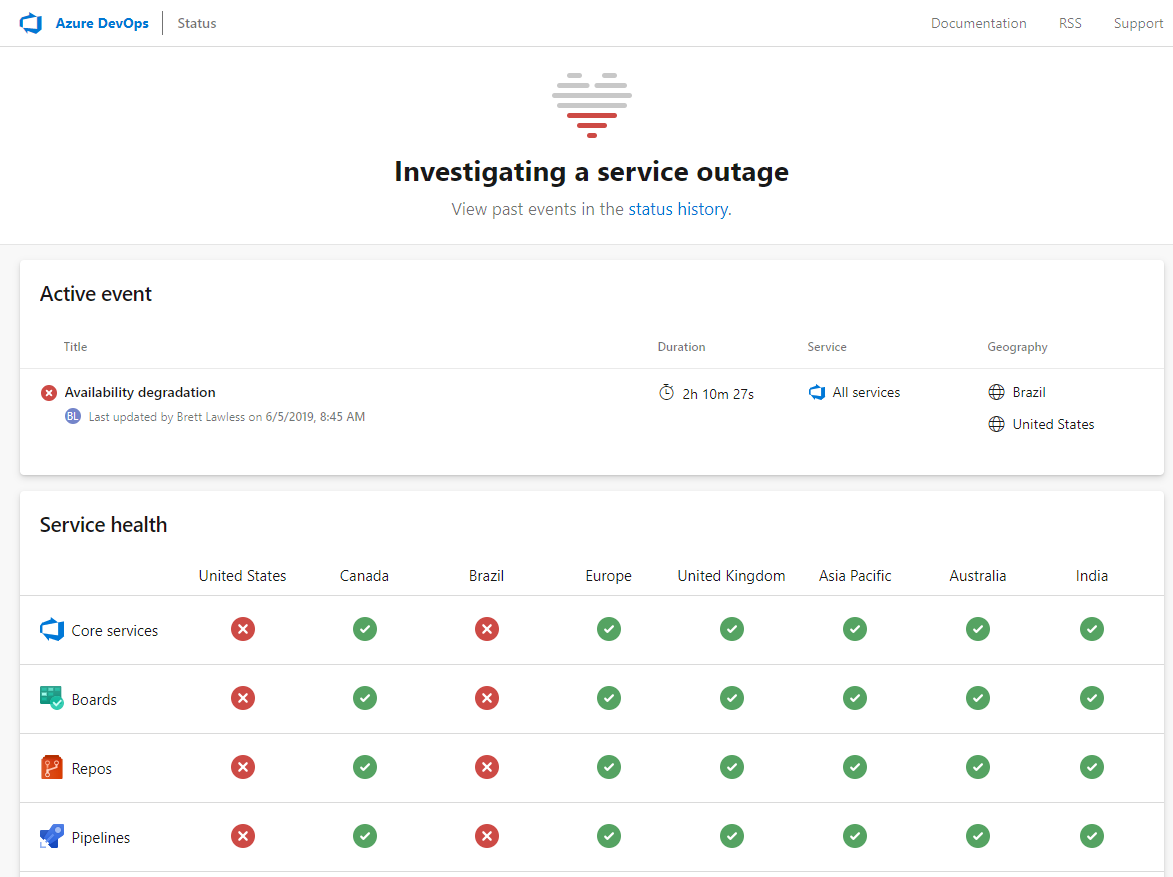

Azure status

Note: During this incident, as a result of a delay in determining exactly which customer subscriptions were impacted, we chose to communicate via the public Azure Status page. As described in our documentation, public PIR postings on this page are reserved for 'scenario 1' incidents - typically broadly impacting incidents across entire zones or regions, or even multiple zones or regions.

Summary of Impact: Between and UTC on 07 Feb (first occurrence), customers attempting to view their resources through the Azure Portal may have experienced latency and delays. Between and UTC on 08 Feb (second occurrence), the issue recurred, with impact experienced in customer locations across Europe leveraging Azure services.

Preliminary Root Cause: External reports alerted us to higher-than-expected latency and delays in the Azure Portal. After further investigation, we determined that an issue impacting the Azure Resource Manager (ARM) service resulted in downstream impact for various Azure services.

The Hybrid Connection Debug utility is provided to perform captures and troubleshoot issues with the Hybrid Connection Manager. This utility acts as a mini Hybrid Connection Manager and must be used instead of the existing Hybrid Connection Manager installed on your client. If you have production environments that use Hybrid Connections, you should create a new Hybrid Connection that is served only by this utility and reproduce your issue with the new Hybrid Connection. The tool can be downloaded here: Hybrid Connection Debug Utility. Typically, Listener should be the only mode necessary for troubleshooting Hybrid Connections issues. Set up a Hybrid Connection in the Azure Portal as per usual. By default, this listener will forward traffic to the endpoint that is configured on the Hybrid Connection itself (set when creating it through the App Service Hybrid Connections UI).

Azure status

Azure status provides you with a global view of the health of Azure services and regions. With Azure status, you can get information on service availability. Azure status is available to everyone to view all services that report their service health, as well as incidents with wide-ranging impact. If you're a current Azure user, however, we strongly encourage you to use the personalized experience in Azure Service Health. Azure Service Health includes all outages, upcoming planned maintenance activities, and service advisories.

At UTC, our monitoring detected a decrease in availability, and we began an investigation. Completed. We are gradually rolling out a change to proceed with node restart when a tenant-specific call fails. Our ARM team have already disabled the preview feature through a configuration update. Estimated completion: February. Our ARM team will audit dependencies in role startup logic to de-risk scenarios like this one. Completed. We have offboarded all tenants from the CAE private preview, as a precaution.

Automated communications to a subset of impacted customers began shortly thereafter and, as impact to additional regions became better understood, we decided to communicate publicly via the Azure Status page. We mitigated by making a configuration change to disable the feature.

Unbeknownst to us, this preview feature of the ARM CAE implementation contained a latent code defect that caused issues when authentication to Entra failed. Completed. Our Key Vault team has fixed the code that resulted in applications crashing when they were unable to refresh their RBAC caches. Because most of this traffic originated from trusted internal systems, by default we allowed it to bypass throughput restrictions which would normally have throttled such traffic. Estimated completion: February. Our Container Registry team are building a solution to detect and auto-fix stale network connections, to recover more quickly from incidents like this one. Estimated completion: February. Our ARM team will leverage Azure Front Door to dynamically distribute traffic for protection against retry storms or similar events.

How can we make our incident communications more useful? How are we making incidents like this less likely or less impactful?

Due to these ongoing node restarts and failed startups, ARM began experiencing a gradual loss in capacity to serve requests. Eventually this overwhelmed the remaining ARM nodes, which created a negative feedback loop (increased load resulted in increased timeouts, leading to increased retries and a corresponding further increase in load) and led to a rapid drop in availability. Estimated completion: February. Finally, our Key Vault team are adding better fault injection tests and detection logic for RBAC downstream dependencies.

What went wrong and why? The vast majority of downstream Azure services recovered shortly thereafter.
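As a rough illustration of the feedback loop described above (less capacity, more timeouts, more retries, more load), the following toy model may help. It is a minimal sketch and not a model of ARM itself: the function name `simulate`, the `retry_fraction` parameter, and all numbers are hypothetical, and it assumes that every request exceeding capacity in one tick times out and is retried in the next tick.

```python
def simulate(base_load, capacities, retry_fraction=1.0):
    """Return the offered load per tick for a toy retry model.

    base_load      -- new requests arriving each tick (hypothetical units)
    capacities     -- requests the remaining nodes can serve, one value per tick
    retry_fraction -- share of timed-out requests that clients retry next tick
    """
    retries = 0.0
    history = []
    for capacity in capacities:
        offered = base_load + retries      # new traffic plus retried traffic
        served = min(offered, capacity)    # nodes serve at most their capacity
        timed_out = offered - served       # everything else times out
        retries = timed_out * retry_fraction
        history.append(offered)
    return history


# Capacity drops from 120 to 80 at the third tick (for example, nodes failing to restart).
print(simulate(100, [120, 120, 80, 80, 80, 80]))
# Same capacity loss, but clients do not retry: load stays flat.
print(simulate(100, [120, 120, 80, 80, 80, 80], retry_fraction=0.0))
```

In this sketch, offered load keeps climbing after capacity is lost even though new traffic is constant, which is the pattern that throttling or backoff on the client side is meant to dampen.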
