Flink keyby

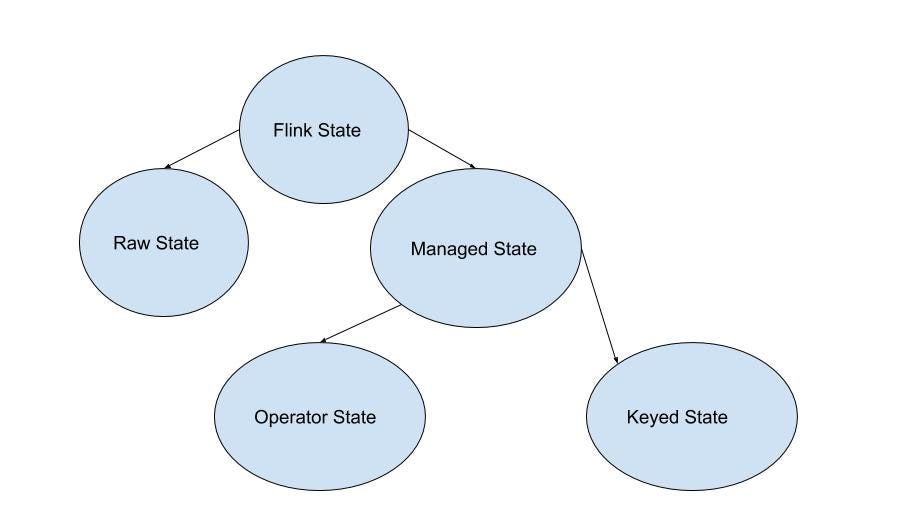

In this section you will learn about the APIs that Flink provides for writing stateful programs.

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies. Map takes one element and produces one element; for example, a map function can double the values of the input stream. FlatMap takes one element and produces zero, one, or more elements; for example, a flatmap function can split sentences into words. Filter evaluates a boolean function for each element and retains those for which the function returns true.
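The three transformations above can be illustrated without a Flink cluster. The following is a minimal sketch in plain Java that uses `java.util.stream` as a stand-in for a DataStream; the class and method names are hypothetical, and the non-zero predicate in the filter is only an illustrative choice. It shows the element-level contract of each operator, not Flink's own API.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class BasicTransformations {
    // Map: one element in, exactly one element out (doubles each value).
    public static List<Integer> doubleValues(List<Integer> input) {
        return input.stream().map(v -> 2 * v).collect(Collectors.toList());
    }

    // FlatMap: one element in, zero or more elements out (sentence -> words).
    public static List<String> splitWords(List<String> sentences) {
        return sentences.stream()
                .flatMap(s -> Arrays.stream(s.split(" ")))
                .collect(Collectors.toList());
    }

    // Filter: keeps only elements for which the predicate returns true.
    public static List<Integer> dropZeros(List<Integer> input) {
        return input.stream().filter(v -> v != 0).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(doubleValues(Arrays.asList(1, 2, 3)));
        System.out.println(splitWords(Arrays.asList("to be", "or not")));
        System.out.println(dropZeros(Arrays.asList(0, 4, 0, 5)));
    }
}
```

In a Flink job the same lambdas would be passed to the corresponding operators on a DataStream, where they are applied to each record as it arrives rather than to a finished list.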

This article explains the basic concepts, installation, and deployment process of Flink. Definitions of stream processing vary. Conceptually, stream processing and batch processing are two sides of the same coin. Which side you see depends on how the data is treated: the elements of a Java ArrayList, for example, can be regarded as a finite dataset and accessed by index, or they can be consumed one at a time through an iterator.

Figure 1 shows a coin classifier on the left, which can be described as a stream processing system. All components used for coin classification are connected in series in advance. Coins continuously enter the system and are output to different queues for later use. The same is true for the picture on the right.

A stream processing system has several characteristic features. Generally, it uses a data-driven processing method to support the processing of infinite datasets: operators are set up in advance, and the data is then processed.
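The ArrayList comparison can be made concrete with a short sketch in plain Java. The class and method names are hypothetical. Both methods compute the same sum; the difference is only in how the data is accessed, which is the point of the "two sides of the same coin" remark.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

public class BatchVersusStream {
    // Batch view: the dataset is bounded, so its size is known up front
    // and elements can be read by index.
    public static int sumByIndex(List<Integer> data) {
        int sum = 0;
        for (int i = 0; i < data.size(); i++) {
            sum += data.get(i);
        }
        return sum;
    }

    // Stream view: elements are consumed one at a time as they become
    // available; the consumer never asks how many there are in total.
    public static int sumByIterator(Iterator<Integer> source) {
        int sum = 0;
        while (source.hasNext()) {
            sum += source.next();
        }
        return sum;
    }

    public static void main(String[] args) {
        List<Integer> data = new ArrayList<>(Arrays.asList(1, 2, 3, 4));
        System.out.println(sumByIndex(data));
        System.out.println(sumByIterator(data.iterator()));
    }
}
```

The iterator version would keep working if the source never ended, whereas the indexed version depends on a known size.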

The slot sharing group is inherited from input operations if all input operations are in the same slot sharing group. This would require only local data transfers instead of transferring data over the network, depending on other configuration values such as the number of slots of TaskManagers.

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine. We also described how to make data partitioning in Apache Flink customizable based on modifiable rules instead of using a hardcoded KeysExtractor implementation. We intentionally omitted details of how the applied rules are initialized and what possibilities exist for updating them at runtime. In this post, we will address exactly these details. You will learn how the approach to data partitioning described in Part 1 can be applied in combination with a dynamic configuration. These two patterns, when used together, can eliminate the need to recompile the code and redeploy your Flink job for a wide range of modifications of the business logic.

Flink uses a concept called windows to divide a potentially infinite DataStream into finite slices based on the timestamps of elements or other criteria. This division is required when working with infinite streams of data and performing transformations that aggregate elements.

We will mostly talk about keyed windowing here. Keyed windows have the advantage that elements are subdivided based on both window and key before being given to a user function. The work can thus be distributed across the cluster because the elements for different keys can be processed independently. If you absolutely have to, you can check out non-keyed windowing, where we describe how non-keyed windows work. For a windowed transformation you must at least specify a key (see specifying keys), a window assigner, and a window function.
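To illustrate how elements are subdivided by both key and window, here is a minimal sketch in plain Java. It is not Flink's window API: the `Event` class, the method names, and the fixed-size (tumbling) window rule are assumptions made for the example, and timestamps are assumed to be non-negative. The key plays the role of the specified key, `windowStart` plays the role of the window assigner, and the summation plays the role of the window function.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class TumblingWindowSketch {
    // Hypothetical event type: a key, an event timestamp, and a value.
    public static final class Event {
        final String key;
        final long timestamp;
        final long value;

        public Event(String key, long timestamp, long value) {
            this.key = key;
            this.timestamp = timestamp;
            this.value = value;
        }
    }

    // Window assigner: maps a timestamp to the start of its fixed-size window.
    public static long windowStart(long timestamp, long size) {
        return timestamp - (timestamp % size);
    }

    // Window function: sums the values of each (key, window) slice.
    // Result keys have the form "key@windowStart".
    public static Map<String, Long> sumPerKeyAndWindow(List<Event> events, long size) {
        Map<String, Long> sums = new TreeMap<>();
        for (Event e : events) {
            String slice = e.key + "@" + windowStart(e.timestamp, size);
            sums.merge(slice, e.value, Long::sum);
        }
        return sums;
    }

    public static void main(String[] args) {
        List<Event> events = Arrays.asList(
                new Event("a", 1, 1), new Event("a", 4, 2),
                new Event("a", 5, 3), new Event("b", 2, 10));
        System.out.println(sumPerKeyAndWindow(events, 5));
    }
}
```

Because each slice depends only on its own key and window, slices for different keys could be computed on different machines, which is the distribution advantage described above.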

A filter can, for example, filter out zero values. In the word count example (Figure 8), the words in the stream are grouped by key (KeyBy) and the count of each word is computed cumulatively (sum(1)). This is the computational graph. However, to count the total transaction volume of all types, all records must be output to the same compute node.

When operators are chained, the two mappers will be chained, and the filter will not be chained to the first mapper. Operators and job vertices in Flink have a name and a description. You can also set the slot sharing group of an operation.

With map state, you can put key-value pairs into the state and retrieve an Iterable over all currently stored mappings. You do not need to physically pack the dataset types into keys and values. In the state example, the first field is the count and the second field is a running sum.
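The KeyBy and sum step can be sketched in plain Java as follows. This is an illustration of the idea, not Flink code: the class and method names are hypothetical, and `subtaskFor` uses a simple hash modulo as a stand-in for Flink's actual key-to-subtask assignment, which is more involved. What the sketch preserves is the property that matters: records with the same key always go to the same place, so a per-key running total can be kept locally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class KeyedWordCount {
    // Stand-in for key partitioning: equal keys always map to the same
    // subtask index in [0, parallelism). Simplified assumption, see lead-in.
    public static int subtaskFor(String key, int parallelism) {
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Splits sentences into words, groups by word, and emits the running
    // count after every word, in the spirit of keyBy(word) followed by sum.
    public static List<String> runningCounts(List<String> sentences) {
        Map<String, Integer> countPerWord = new HashMap<>(); // per-key state
        List<String> output = new ArrayList<>();
        for (String sentence : sentences) {
            for (String word : sentence.split(" ")) {
                int count = countPerWord.merge(word, 1, Integer::sum);
                output.add(word + "=" + count);
            }
        }
        return output;
    }

    public static void main(String[] args) {
        System.out.println(runningCounts(Arrays.asList("to be or", "not to be")));
        System.out.println(subtaskFor("to", 4) == subtaskFor("to", 4));
    }
}
```

Counting a total across all keys, as in the transaction volume remark above, is the opposite case: every record has to reach one node, so the work can no longer be spread by key.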

Chaining two subsequent transformations means co-locating them within the same thread for better performance. You can manually isolate operators in separate slots if desired. When the program is run in an actual environment, it creates a RemoteStreamExecutionEnvironment object.

A window function can, for example, manually sum the elements of a window. An interval join joins two elements e1 and e2 of two keyed streams with a common key over a given time interval.

If the stream type is generic, its type information may not be inferable after type erasure. For some types, Flink also provides their type information, which can be used directly without additional declarations.

For map state, the iterable views for mappings, keys, and values can be retrieved using entries(), keys(), and values() respectively. A stateful SinkFunction can use CheckpointedFunction to buffer elements before sending them to the outside world. The TTL functionality can be enabled in any state descriptor by passing the configuration.
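The buffering sink idea can be sketched in plain Java. This is a simplified model, not Flink's SinkFunction or CheckpointedFunction interfaces: the class, the method names `invoke`, `snapshotState`, and `restoreState`, and the in-memory `flushed` list standing in for the outside world are all assumptions made for the example. It shows why the buffer has to be part of the checkpointed state: elements that have been received but not yet sent would otherwise be lost on failure.

```java
import java.util.ArrayList;
import java.util.List;

public class BufferingSinkSketch {
    private final int threshold;
    private final List<String> buffer = new ArrayList<>();
    // Stand-in for the external system: each inner list is one sent batch.
    private final List<List<String>> flushed = new ArrayList<>();

    public BufferingSinkSketch(int threshold) {
        this.threshold = threshold;
    }

    // Called once per element: buffer it, and send a batch when full.
    public void invoke(String value) {
        buffer.add(value);
        if (buffer.size() >= threshold) {
            flushed.add(new ArrayList<>(buffer));
            buffer.clear();
        }
    }

    // Checkpoint: return a copy of the elements not yet sent.
    public List<String> snapshotState() {
        return new ArrayList<>(buffer);
    }

    // Recovery: refill the buffer from the last snapshot.
    public void restoreState(List<String> snapshot) {
        buffer.clear();
        buffer.addAll(snapshot);
    }

    public List<List<String>> flushed() {
        return flushed;
    }

    public static void main(String[] args) {
        BufferingSinkSketch sink = new BufferingSinkSketch(2);
        sink.invoke("a");
        sink.invoke("b");
        sink.invoke("c");
        System.out.println(sink.flushed());
        System.out.println(sink.snapshotState());
    }
}
```

A replacement sink that calls `restoreState` with the last snapshot continues from the unsent elements instead of starting with an empty buffer.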
