Hugging Face Stable Diffusion

For more information, you can check out the official blog post. Since its public release, the community has done an incredible job of working together to make the Stable Diffusion checkpoints faster, more memory efficient, and more performant. This notebook walks you through the improvements one by one so you can best leverage StableDiffusionPipeline for inference.

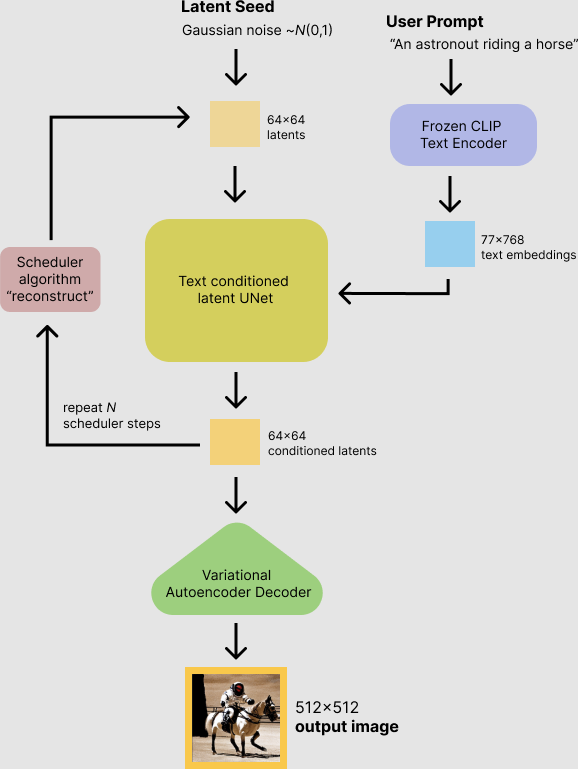

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. For more detailed instructions, use cases, and examples in JAX, follow the instructions here. Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub Repository, Paper. The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people.


Getting the DiffusionPipeline to generate images in a certain style or include what you want can be tricky. This tutorial walks you through how to generate faster and better with the DiffusionPipeline.

One of the simplest ways to speed up inference is to place the pipeline on a GPU, the same way you would with any PyTorch module. To make sure you can reproduce the same image and improve on it, use a Generator and set a seed.

By default, the DiffusionPipeline runs inference with full float32 precision for 50 inference steps. You can speed this up by switching to a lower precision like float16 or by running fewer inference steps. Choosing a more efficient scheduler could help decrease the number of steps without sacrificing output quality. You can find which schedulers are compatible with the current model through the compatibles attribute of the pipeline's scheduler.

The easiest way to see how many images you can generate at once is to try out different batch sizes until you get an OutOfMemoryError (OOM).
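The steps above can be sketched as follows. This is a minimal sketch, not the tutorial's original code: the helper names (`load_pipeline`, `generate`, `largest_batch_size`) are made up for illustration, the checkpoint is left as a `model_id` argument because the text does not name one, and it assumes that `DPMSolverMultistepScheduler` appears among the compatible schedulers for your checkpoint.

```python
def load_pipeline(model_id, use_fp16=True, device="cuda"):
    """Load a checkpoint, optionally in float16, and move it to `device`."""
    # Imported lazily so the batch-size helper below works without a GPU stack.
    import torch
    from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler

    dtype = torch.float16 if use_fp16 else torch.float32
    pipeline = DiffusionPipeline.from_pretrained(model_id, torch_dtype=dtype)
    pipeline = pipeline.to(device)

    # Schedulers that can replace the default one for this model.
    print(pipeline.scheduler.compatibles)
    # Assumption: this scheduler is in the list printed above.
    pipeline.scheduler = DPMSolverMultistepScheduler.from_config(
        pipeline.scheduler.config
    )
    return pipeline


def generate(pipeline, prompt, seed=0, num_inference_steps=20,
             batch_size=1, device="cuda"):
    """Generate `batch_size` images for one prompt with a fixed seed."""
    import torch

    # A seeded Generator makes the result reproducible across runs.
    generator = torch.Generator(device).manual_seed(seed)
    result = pipeline(
        [prompt] * batch_size,
        generator=generator,
        num_inference_steps=num_inference_steps,
    )
    return result.images


def largest_batch_size(try_batch, oom_errors=(MemoryError,), start=1, limit=64):
    """Double the batch size until `try_batch` raises one of `oom_errors`.

    Returns the largest size that succeeded, or 0 if even `start` failed.
    """
    best = 0
    size = start
    while size <= limit:
        try:
            try_batch(size)
        except oom_errors:
            break
        best = size
        size *= 2
    return best
```

With a loaded pipeline, the search could be wired up as `largest_batch_size(lambda n: generate(pipeline, prompt, batch_size=n), oom_errors=(torch.cuda.OutOfMemoryError,))`, assuming a recent PyTorch version that exposes that exception class.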

By far most of the memory is taken up by the cross-attention layers.


Stable Cascade works in a much smaller latent space than Stable Diffusion. Why is this important? The smaller the latent space, the faster you can run inference and the cheaper the training becomes. How small is the latent space? Stable Diffusion uses a spatial compression factor of 8, so each side of an image is encoded at one eighth of its resolution. Stable Cascade achieves a compression factor of 42, which makes it possible to encode an image down to 24x24 while maintaining crisp reconstructions. The text-conditional model is then trained in this highly compressed latent space. Previous versions of this architecture achieved a 16x cost reduction over Stable Diffusion 1. This kind of model is therefore well suited for uses where efficiency is important.
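To make the compression arithmetic concrete, here is a small sketch. The function names are illustrative, and the 1008-pixel side length is a hypothetical input chosen only because 24 × 42 = 1008; it is not a figure taken from the text.

```python
def latent_side(image_side: int, compression_factor: int) -> int:
    """Side length of the latent grid for a square image."""
    return image_side // compression_factor


def latent_positions_ratio(small_factor: int, large_factor: int) -> float:
    """How many times fewer latent positions the larger factor yields."""
    return (large_factor / small_factor) ** 2


side = 1008  # hypothetical image side, chosen so that 1008 / 42 = 24
print(latent_side(side, 8))           # latent side at compression factor 8
print(latent_side(side, 42))          # latent side at compression factor 42
print(latent_positions_ratio(8, 42))  # reduction in number of latent positions
```

Because the number of latent positions falls with the square of the compression factor, moving from a factor of 8 to a factor of 42 shrinks the grid the model has to process by more than an order of magnitude.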



Misuse includes, but is not limited to, generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc. Possible research areas include research on generative models.

Training Data: The model was trained on a subset of the large-scale dataset LAION-5B, which contains adult, violent and sexual content. No additional measures were used to deduplicate the dataset. Training follows the mask-generation strategy presented in LAMA, which, in combination with the latent VAE representations of the masked image, is used as additional conditioning.

Limitations: The autoencoding part of the model is lossy. The model was trained mainly with English captions and will not work as well in other languages. This affects the overall output of the model, as white and western cultures are often set as the default.

The diffusers library is designed with a focus on usability over performance, simple over easy, and customizability over abstractions. For more details about installing PyTorch and Flax, please refer to their official documentation. You can also dig into the models and schedulers toolbox to build your own diffusion system.
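As a rough illustration of what combining a model and a scheduler looks like, the sketch below writes the denoising loop against the interface that diffusers models and schedulers expose: `scheduler.set_timesteps`, `scheduler.timesteps`, `scheduler.step(...).prev_sample`, and `model(...).sample`. The function name `denoise` is made up for this example, and no particular checkpoint is assumed.

```python
def denoise(model, scheduler, sample, num_inference_steps=50):
    """Run a plain denoising loop with a model and a scheduler.

    `model(sample, t)` must return an object with a `.sample` attribute
    (the predicted noise residual), and `scheduler.step(...)` must return
    an object with a `.prev_sample` attribute, as diffusers objects do.
    """
    scheduler.set_timesteps(num_inference_steps)
    for t in scheduler.timesteps:
        # Predict the noise residual for the current timestep.
        noisy_residual = model(sample, t).sample
        # Let the scheduler compute the slightly less noisy sample.
        sample = scheduler.step(noisy_residual, t, sample).prev_sample
    return sample
```

With real diffusers objects you would typically call this inside `torch.no_grad()`, start from random noise shaped like the model's expected input, and rescale the result to image range afterwards.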

Published on November 28.

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. Out-of-Scope Use: The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out of scope for the abilities of this model. Possible research areas also include probing and understanding the limitations and biases of generative models. The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

We recommend having a look at all diffusers checkpoints sorted by downloads and trying out the different checkpoints. We currently provide the following checkpoints: base-ema. The same strategy was used to train the 1. Evaluations were run with different classifier-free guidance scales.

You can also change the hyperparameters of the Stable Diffusion pipeline by passing them in the parameters attribute when sending requests, for example to set the size of the generated image.
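The request format mentioned above could look roughly like this. It is a sketch under assumptions: the text only says that hyperparameters go in a `parameters` attribute, so the `inputs` key for the prompt, the `width` and `height` parameter names, the example prompt, and the 512-pixel size are all illustrative choices rather than values from the original post.

```python
import json


def build_payload(prompt, width, height, **extra_parameters):
    """Serialize a text-to-image request with pipeline hyperparameters."""
    parameters = {"width": width, "height": height}
    # Any further pipeline arguments are passed through unchanged.
    parameters.update(extra_parameters)
    return json.dumps({"inputs": prompt, "parameters": parameters})


# Hypothetical prompt and image size, for illustration only.
payload = build_payload("a photo of an astronaut riding a horse", width=512, height=512)
print(payload)
```

Keeping the hyperparameters in their own object separates the prompt from the generation settings, so the same prompt can be resent with different sizes or step counts.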
