io.confluent.kafka.serializers.KafkaAvroSerializer


Whichever method you choose for your application, the most important factor is that your application coordinates with Schema Registry to manage schemas and guarantee data compatibility. There are two ways to interact with Kafka: using a native client for your language combined with serializers compatible with Schema Registry, or using the REST Proxy. Most commonly, you will use the serializers if your application is written in a language with supported serializers, and the REST Proxy for applications written in other languages. Java applications can use the standard Kafka producers and consumers, but will substitute the default ByteArraySerializer with io.
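As a rough illustration of that substitution, the sketch below builds producer properties that name the Avro serializer from this page's title for both key and value. The broker and Schema Registry addresses are placeholder assumptions, and the class `AvroProducerConfig` is a hypothetical helper, not part of any Confluent library. It uses only `java.util.Properties`, so it runs without Kafka on the classpath.

```java
import java.util.Properties;

public class AvroProducerConfig {
    // Fully qualified serializer class name, as given in this page's title.
    static final String AVRO_SERIALIZER = "io.confluent.kafka.serializers.KafkaAvroSerializer";

    // Builds producer properties that replace the default serializers.
    // Both addresses are supplied by the caller; the values used in main()
    // are assumptions for a local setup.
    public static Properties build(String bootstrapServers, String schemaRegistryUrl) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("key.serializer", AVRO_SERIALIZER);
        props.setProperty("value.serializer", AVRO_SERIALIZER);
        // The serializer needs to know where Schema Registry lives.
        props.setProperty("schema.registry.url", schemaRegistryUrl);
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("localhost:9092", "http://localhost:8081");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

In a real application, these properties would be passed to the standard Kafka producer constructor, with the Confluent serializer dependency available at runtime.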


Support for these new serialization formats is not limited to Schema Registry, but is provided throughout Confluent Platform. Additionally, Schema Registry is extensible to support adding custom schema formats as schema plugins. The serializers can automatically register schemas when serializing a Protobuf message or a JSON-serializable object. The Protobuf serializer can recursively register all imported schemas. The serializers and deserializers are available in multiple languages, including Java, .NET, and Python. Schema Registry supports multiple formats at the same time. For example, you can have Avro schemas in one subject and Protobuf schemas in another. Furthermore, both Protobuf and JSON Schema have their own compatibility rules, so you can have your Protobuf schemas evolve in a backward or forward compatible manner, just as with Avro. Schema Registry in Confluent Platform also supports schema references in Protobuf by modeling the import statement. Avro, Protobuf, and JSON Schema are supported out of the box with Confluent Platform, with serializers, deserializers, and command line tools available for each format. Use the serializer and deserializer for your schema format. Specify the serializer in the code for the Kafka producer to send messages, and specify the deserializer in the code for the Kafka consumer to read messages. The new Protobuf and JSON Schema serializers and deserializers support many of the same configuration properties as the Avro equivalents, including subject name strategies for the key and value.

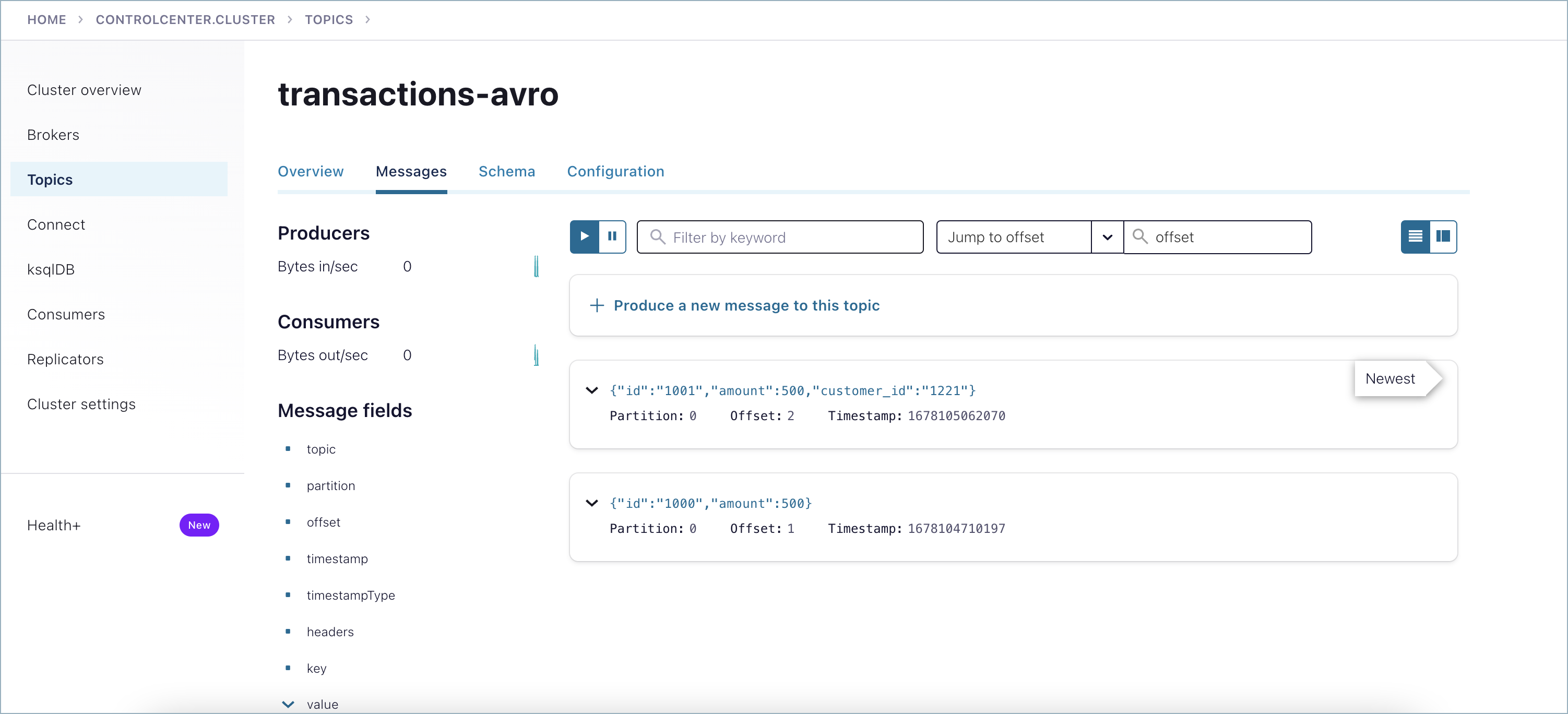

Open a new terminal window, and use the consumer to read from topic transactions-avro and get the value of the message in JSON. For example: confluent.

The Confluent Schema Registry based Avro serializer, by design, does not include the message schema. Instead, it writes a magic byte, then the schema ID, then the normal binary encoding of the data itself. You can choose whether or not to embed a schema inline, which allows for cases where you may want to communicate the schema offline, with headers, or some other way. This is in contrast to other systems, such as Hadoop, that always include the schema with the message data. To learn more, see Wire format. Typically, IndexedRecord is used for the value of the Kafka message. If used, the key of the Kafka message is often one of the primitive types.
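The framing described above can be sketched in a few lines. The example below assumes the commonly documented layout: one magic byte with value 0, a 4-byte big-endian schema ID, then the Avro binary payload. The class `WireFormat` and its method names are hypothetical and written only for illustration; they are not the serializer's actual implementation, and the payload bytes are treated as opaque.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

public class WireFormat {
    static final byte MAGIC_BYTE = 0x0;
    static final int HEADER_SIZE = 1 + 4; // magic byte + 4-byte schema ID

    // Prepends the magic byte and schema ID to an already-encoded payload.
    public static byte[] frame(int schemaId, byte[] avroPayload) {
        return ByteBuffer.allocate(HEADER_SIZE + avroPayload.length)
                .put(MAGIC_BYTE)
                .putInt(schemaId)      // ByteBuffer is big-endian by default
                .put(avroPayload)
                .array();
    }

    // Reads the schema ID from a framed message, validating the header first.
    public static int schemaId(byte[] message) {
        ByteBuffer buf = ByteBuffer.wrap(message);
        if (buf.remaining() < HEADER_SIZE || buf.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Not a Schema Registry framed message");
        }
        return buf.getInt();
    }

    // Returns the bytes after the header, i.e. the encoded data itself.
    public static byte[] payload(byte[] message) {
        schemaId(message); // reuse the header validation
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }

    public static void main(String[] args) {
        byte[] framed = frame(42, new byte[] {1, 2, 3});
        System.out.println("schema id: " + schemaId(framed));
        System.out.println("payload length: " + payload(framed).length);
    }
}
```

A consumer-side deserializer would use the extracted ID to look up the writer schema in Schema Registry before decoding the payload.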



What is the simplest way to write messages to and read messages from Kafka, using (de)serializers and Schema Registry? Next, create the following docker-compose. Your first step is to create a topic to produce to and consume from. Use the following command to create the topic: We are going to use Schema Registry to control our record format. The first step is creating a schema definition, which we will use when producing new records. From the same terminal you used to create the topic above, run the following command to open a terminal on the broker container:


Avro defines both a binary serialization format and a JSON serialization format. In this case, it is more useful to keep an ordered sequence of related messages together that play a part in a chain of events, regardless of topic names. The array [1, 0] is (reading the array backwards) the first nested message type of the second top-level message type, corresponding to test. This behavior can be modified by using the following configs: key. Use the default subject naming strategy, TopicNameStrategy, which uses the topic name to determine the subject to be used for schema lookups, and helps to enforce subject-topic constraints. Additionally, it includes the schema IDs it registered or looked up in Schema Registry. To ensure there is no variation even as the serializers are updated with new formats, the serializers are very conservative when updating output formats. If latest.
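To make the subject naming concrete, here is a minimal sketch of how subject names are commonly derived. It assumes the usual convention that TopicNameStrategy appends `-key` or `-value` to the topic name, and it includes the two record-based strategies (RecordNameStrategy and TopicRecordNameStrategy) for comparison. The class `SubjectNames` is a hypothetical stand-in written for illustration, not the library's strategy classes, and the record name `com.example.Transaction` is invented.

```java
public class SubjectNames {
    // TopicNameStrategy (the default): subject is derived from the topic only.
    public static String topicName(String topic, boolean isKey) {
        return topic + (isKey ? "-key" : "-value");
    }

    // RecordNameStrategy: subject is the fully qualified record name,
    // independent of the topic the record is written to.
    public static String recordName(String fullyQualifiedRecordName) {
        return fullyQualifiedRecordName;
    }

    // TopicRecordNameStrategy: subject combines the topic and the record name.
    public static String topicRecordName(String topic, String fullyQualifiedRecordName) {
        return topic + "-" + fullyQualifiedRecordName;
    }

    public static void main(String[] args) {
        String topic = "transactions-avro";           // topic name used earlier on this page
        String record = "com.example.Transaction";    // hypothetical record name
        System.out.println(topicName(topic, false));
        System.out.println(recordName(record));
        System.out.println(topicRecordName(topic, record));
    }
}
```

With the default strategy, every value written to one topic shares a single subject, which is what lets Schema Registry enforce compatibility per topic.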


Any changes made will be fully backward compatible, with documentation in release notes and at least one version of warning provided if a change introduces a new serialization feature that requires additional downstream support. It is a convenient, language-agnostic method for interacting with Kafka. This strategy allows a topic to contain a mixture of different record types, since no intra-topic compatibility checking is performed. For Protobuf, the message name. We recommend these values be set using a properties file that your application loads and passes to the producer constructor. In future requests, you can use this schema ID instead of the full schema, reducing the overhead for each request. This brief overview should get you started, and you can find more detailed information in the Formats, Serializers, and Deserializers section of the Schema Registry documentation. For example, given a Schema Registry entry with the following definition: Confluent Platform provides full support for the notion of schema references, the ability of a schema to refer to other schemas.
