nn.CrossEntropyLoss

In machine learning classification problems, cross-entropy loss is a frequently used loss function. It measures the difference between the predicted probability distribution and the actual probability distribution of the target classes.
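Here is a minimal illustration of that definition in plain Python, with no PyTorch. The function computes H(p, q) = -Σ p_i log q_i between a true distribution p and a predicted distribution q. The function name `cross_entropy` and the example numbers are hypothetical and chosen only for illustration.

```python
import math

def cross_entropy(p_true, q_pred):
    """Cross-entropy H(p, q) = -sum_i p_i * log(q_i), in nats."""
    # Terms with p_i == 0 contribute nothing, so they are skipped (avoids log(0)).
    return -sum(p * math.log(q) for p, q in zip(p_true, q_pred) if p > 0)

# One-hot target: the true class is index 2.
target = [0.0, 0.0, 1.0]
good_pred = [0.1, 0.1, 0.8]  # most mass on the correct class
bad_pred = [0.6, 0.3, 0.1]   # most mass on a wrong class

print(round(cross_entropy(target, good_pred), 4))  # 0.2231, i.e. -log(0.8)
print(round(cross_entropy(target, bad_pred), 4))   # 2.3026, i.e. -log(0.1)
```

With a one-hot target, only the predicted probability of the true class matters. The loss therefore reduces to the negative log of that probability.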

I am trying to compute the cross-entropy loss of a given output of my network. Can anyone help me? I am really confused and have tried almost everything I could imagine. This is the code that I use to get the output of the last timestep. I don't know if there is a simpler solution; if there is, I'd like to know it. This is my forward.
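The question's own code is not included here, so what follows is only a minimal sketch. It assumes an LSTM-based sequence classifier whose last-timestep output is fed to a linear layer that produces raw logits for `nn.CrossEntropyLoss`. The class name `LastStepClassifier` and all sizes are hypothetical.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration.
batch_size, seq_len, input_size, hidden_size, num_classes = 4, 7, 10, 16, 3

class LastStepClassifier(nn.Module):
    """Hypothetical model: LSTM -> output of last timestep -> linear logits."""

    def __init__(self):
        super().__init__()
        # batch_first=True means inputs are shaped (batch, seq_len, features).
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        out, _ = self.lstm(x)   # out: (batch, seq_len, hidden_size)
        last = out[:, -1, :]    # last timestep: (batch, hidden_size)
        return self.fc(last)    # raw logits: (batch, num_classes); no softmax here

torch.manual_seed(0)
model = LastStepClassifier()
x = torch.randn(batch_size, seq_len, input_size)
targets = torch.tensor([0, 2, 1, 2])  # class indices, dtype int64

logits = model(x)
loss = nn.CrossEntropyLoss()(logits, targets)
loss.backward()
print(logits.shape, loss.item())
```

Slicing `out[:, -1, :]` is one simple way to take the last timestep. For a single-layer, unidirectional LSTM on unpadded sequences, it matches the final hidden state `h_n[-1]` returned by the LSTM.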

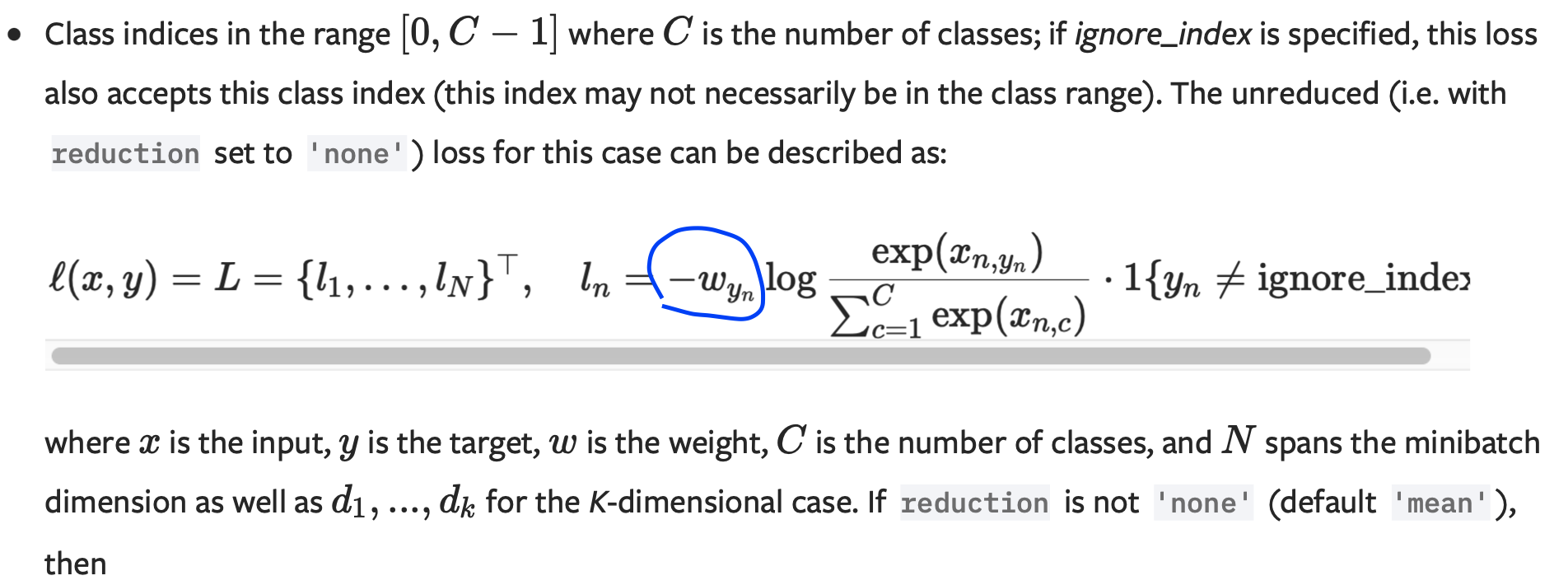

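It is useful when training a classification problem with C classes. If provided, the optional argument `weight` should be a 1D Tensor assigning weight to each of the classes. If given, it has to be a Tensor of size C and floating point dtype. This is particularly useful when you have an unbalanced training set.

The input is expected to contain the unnormalized logits for each class, which do not need to be positive or sum to 1, in general. The input has to be a Tensor of size (C) for unbatched input, (minibatch, C), or (minibatch, C, d_1, d_2, ..., d_K) with K ≥ 1 for the K-dimensional case. The last form is useful for higher-dimensional inputs, such as computing cross-entropy loss per pixel for 2D images.

The target is expected to contain either class indices in the range [0, C), where C is the number of classes, or probabilities for each class. For class indices, the unreduced loss (i.e. with `reduction` set to `'none'`) can be described as

$$
\ell(x, y) = L = \{l_1, \dots, l_N\}^\top, \qquad
l_n = -w_{y_n} \log \frac{\exp(x_{n, y_n})}{\sum_{c=1}^{C} \exp(x_{n, c})}
$$

where x is the input, y is the target, w is the weight, C is the number of classes, and N spans the minibatch dimension (and d_1, ..., d_K for the K-dimensional case). If `reduction` is not `'none'` (default `'mean'`), then

$$
\ell(x, y) =
\begin{cases}
\dfrac{\sum_{n=1}^{N} l_n}{\sum_{n=1}^{N} w_{y_n}}, & \text{if reduction} = \text{'mean'}, \\[1ex]
\sum_{n=1}^{N} l_n, & \text{if reduction} = \text{'sum'}.
\end{cases}
$$

Probabilities for each class are useful when labels beyond a single class per minibatch item are required, such as for blended labels or label smoothing.

The performance of this criterion is generally better when the target contains class indices, as this allows for optimized computation. Consider providing the target as class probabilities only when a single class label per minibatch item is too restrictive. By default, the losses are averaged over each loss element in the batch.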
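The next sketch exercises the options just described: class-index targets, class-probability targets, and per-class weights. It assumes a PyTorch version that accepts probability targets (1.10 or later); the tensor values are arbitrary.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
N, C = 3, 5
logits = torch.randn(N, C)  # unnormalized logits: any sign, rows need not sum to 1

# Target as class indices in [0, C): shape (N,), dtype int64.
idx_target = torch.tensor([1, 0, 4])
loss_idx = nn.CrossEntropyLoss()(logits, idx_target)

# Target as class probabilities: shape (N, C), floating point.
# One-hot rows are used so the result can be compared with loss_idx.
prob_target = F.one_hot(idx_target, num_classes=C).float()
loss_prob = nn.CrossEntropyLoss()(logits, prob_target)

# Per-class weights: a 1D float tensor of size C, e.g. to up-weight a rare class.
weight = torch.tensor([1.0, 2.0, 1.0, 1.0, 0.5])
loss_weighted = nn.CrossEntropyLoss(weight=weight)(logits, idx_target)

print(loss_idx.item(), loss_prob.item(), loss_weighted.item())
```

With one-hot probability targets and no weights, both target forms give the same mean loss. With weights and `'mean'` reduction, the sum of weighted per-sample losses is divided by the sum of the weights of the target classes, not by N.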

The cross-entropy loss penalizes the model more when it is more confident in the incorrect class, which makes intuitive sense.
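To make that intuition concrete, here is a small sketch for a hypothetical two-class case. The model puts probability `p_wrong` on the incorrect class, so the true class gets `1 - p_wrong`, and the per-example loss is `-log(1 - p_wrong)`.

```python
import math

# Binary case: probability assigned to the incorrect class.
p_wrong_values = [0.1, 0.5, 0.9, 0.99]
# Per-example cross-entropy is -log of the probability left for the true class.
losses = [-math.log(1.0 - p_wrong) for p_wrong in p_wrong_values]

for p_wrong, loss in zip(p_wrong_values, losses):
    print(f"confidence in wrong class = {p_wrong:.2f} -> loss = {loss:.3f}")
```

The loss grows without bound as confidence in the wrong class approaches 1, which is why confidently wrong predictions dominate the gradient.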

The cross-entropy loss function is an important criterion for evaluating multi-class classification models. This tutorial demystifies the cross-entropy loss function by providing a comprehensive overview of its significance and implementation in deep learning. Loss functions are essential for guiding model training and improving the predictive accuracy of models. Cross-entropy loss is a fundamental concept in classification tasks, especially multi-class classification. It quantifies the difference between predicted probabilities and the actual class labels.
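One standard way to write this quantity for N examples and C classes is shown below. The notation is ours: t_{n,c} is the target probability of class c for example n (one-hot for hard labels), and \hat{p}_{n,c} is the softmax probability computed from the logits x_{n,c}.

$$
\hat{p}_{n,c} = \frac{\exp(x_{n,c})}{\sum_{k=1}^{C} \exp(x_{n,k})}, \qquad
L = -\frac{1}{N} \sum_{n=1}^{N} \sum_{c=1}^{C} t_{n,c} \log \hat{p}_{n,c}
$$

For one-hot targets, only the true-class term survives, giving L = -\frac{1}{N} \sum_{n=1}^{N} \log \hat{p}_{n, y_n}, where y_n is the true class index of example n.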

What is cross-entropy loss in PyTorch? In the standard case, the targets are class indices stored in a `LongTensor`, for example `[2, 5, 1, 9]`. In your example, you are treating the output `[0, 0, 0, 1]` as probabilities, as required by the mathematical definition of cross entropy. `nn.CrossEntropyLoss`, however, expects unnormalized logits and applies softmax internally, so those values are not interpreted as probabilities. The result is a scalar unless `reduction` is set to `'none'`, in which case one loss is returned per element.
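The following sketch shows what happens to the output from the question, `[0, 0, 0, 1]`, when it is passed directly to `nn.CrossEntropyLoss` with class 3 assumed to be the correct class. Because the values are treated as logits, the loss is not zero even though the output looks like a one-hot "prediction".

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# The output from the question, used as the raw scores of a single sample.
output = torch.tensor([[0.0, 0.0, 0.0, 1.0]])
target = torch.tensor([3])  # assumed correct class index, dtype int64

# nn.CrossEntropyLoss treats `output` as unnormalized logits, not probabilities.
loss = nn.CrossEntropyLoss()(output, target)

# Equivalent computations: softmax -> log -> negative log-likelihood.
probs = F.softmax(output, dim=1)
manual = -torch.log(probs[0, target[0]])
same_as_nll = F.nll_loss(F.log_softmax(output, dim=1), target)

print(probs)        # the softmax probabilities the loss actually uses
print(loss.item())  # equals log(3 + e) - 1, not 0
```

If a network already outputs probabilities, feeding them to `nn.CrossEntropyLoss` applies softmax a second time. The usual approach is to return raw logits from `forward` and let the loss handle normalization.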

Cross-entropy loss, also known as log loss or softmax loss, is a commonly used loss function in PyTorch for training classification models. The loss will be large if, for instance, the model predicts a low probability for the correct class but a high probability for an incorrect class. Note that for some losses, there are multiple elements per sample.
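Multiple loss elements per sample arise in the K-dimensional case, such as per-pixel loss on 2D images. The sketch below uses arbitrary sizes to show how `reduction` treats those elements: `'none'` keeps one loss per pixel, `'mean'` averages over all N·H·W elements, and `'sum'` adds them up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
N, C, H, W = 2, 3, 4, 4
logits = torch.randn(N, C, H, W)         # per-pixel class scores (logits)
target = torch.randint(0, C, (N, H, W))  # per-pixel class indices, dtype int64

per_elem = nn.CrossEntropyLoss(reduction="none")(logits, target)  # shape (N, H, W)
mean_loss = nn.CrossEntropyLoss(reduction="mean")(logits, target)
sum_loss = nn.CrossEntropyLoss(reduction="sum")(logits, target)

# Each sample contributes H * W loss elements; 'mean' averages over all of them.
print(per_elem.shape, mean_loss.item(), sum_loss.item())
```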
