Pandas to Spark

As a data scientist or software engineer, you may often find yourself working with large datasets that require distributed computing. Apache Spark is a powerful distributed computing framework that can handle big data processing tasks efficiently. We will assume that you have a basic understanding of Python, pandas, and Spark. A pandas DataFrame is a two-dimensional, table-like data structure used to store and manipulate data in Python.

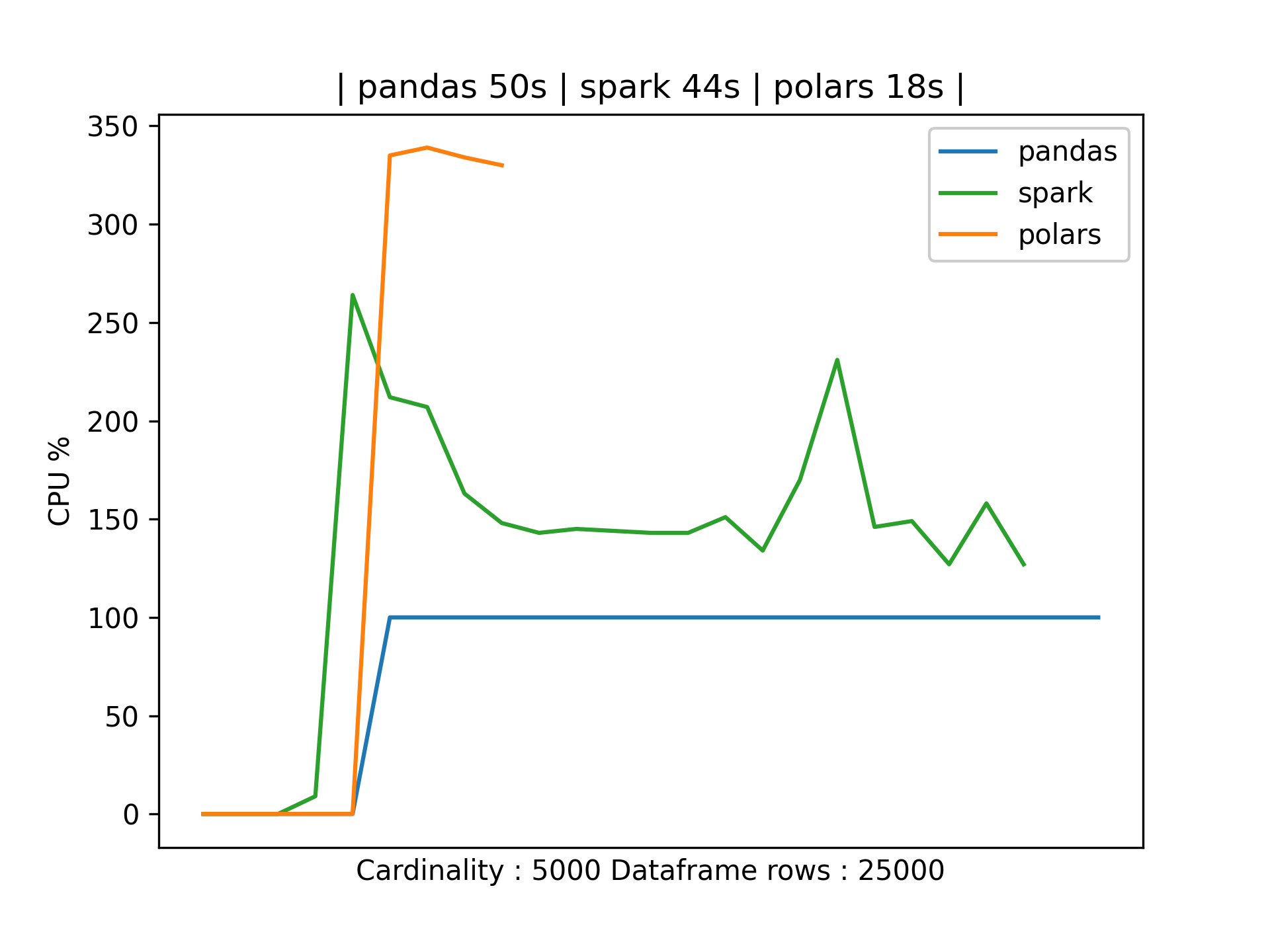

To use pandas, first import it with import pandas as pd. On large datasets, PySpark operations can run faster than pandas because of its distributed nature and parallel execution on multiple cores and machines. In other words, pandas runs operations on a single node, whereas PySpark can run them across multiple machines.

If you want all data types to String use spark. Arrow is disabled by default, so you need to enable it and have Apache Arrow (PyArrow) installed on all Spark cluster nodes, either with pip install pyspark[sql] or by downloading it directly from Apache Arrow for Python. A Spark-compatible version of Apache Arrow must be installed for Arrow-based conversion to work; without it, the conversion fails with an error.
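As a rough sketch of how Arrow might be switched on when building a session: the configuration keys below are the ones documented for Spark 3.x and are an assumption here, since the article does not name them. The helper name `build_arrow_session` and the local master setting are hypothetical choices for illustration.

```python
# Assumption: Spark 3.x configuration keys; the article itself does not name them.
ARROW_CONF = {
    # Use Arrow when converting between pandas and Spark DataFrames.
    "spark.sql.execution.arrow.pyspark.enabled": "true",
    # Fall back to the non-Arrow path if an Arrow conversion fails.
    "spark.sql.execution.arrow.pyspark.fallback.enabled": "true",
}


def build_arrow_session(app_name="pandas-to-spark"):
    """Create (or reuse) a local SparkSession with the Arrow settings applied."""
    # Imported lazily so this module loads even where PySpark is not installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name).master("local[*]")
    for key, value in ARROW_CONF.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

On a machine with PySpark and PyArrow installed, calling `build_arrow_session()` returns a session whose `spark.conf.get(...)` should report these values. On a real cluster you would normally drop the `local[*]` master.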

This is a short introduction to pandas API on Spark, geared mainly toward new users. This notebook shows you some key differences between pandas and pandas API on Spark.

You can create a pandas-on-Spark Series by passing a list of values, letting pandas API on Spark create a default integer index. You can create a pandas-on-Spark DataFrame by passing a dict of objects that can be converted to series-like, with specific dtypes. Types that are common to both Spark and pandas are currently supported.

Note that the data in a Spark DataFrame does not preserve the natural order by default. The natural order can be preserved by setting compute. Pandas API on Spark primarily uses the value np. It is by default not included in computations. For example, you can enable Arrow optimization to greatly speed up internal pandas conversion.
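The Series and DataFrame creation described above could look roughly like the following. This is a minimal sketch: it assumes PySpark 3.2 or later, where the pandas API on Spark is importable as `pyspark.pandas`, and the sample values and the function name `build_pandas_on_spark_objects` are made up for illustration.

```python
# Hypothetical sample data, kept as plain Python so it can be inspected without Spark.
SERIES_VALUES = [1.0, 3.0, 5.0, float("nan"), 6.0, 8.0]
FRAME_COLUMNS = {
    "a": [1, 2, 3],                 # integer column
    "b": [100.0, 200.0, 300.0],     # float column
    "c": ["one", "two", "three"],   # string column
}


def build_pandas_on_spark_objects():
    """Return a pandas-on-Spark Series and DataFrame built from the sample data."""
    # Imported lazily; assumes PySpark >= 3.2, which ships pyspark.pandas.
    import pyspark.pandas as ps

    psser = ps.Series(SERIES_VALUES)    # a default integer index is created
    psdf = ps.DataFrame(FRAME_COLUMNS)  # one column per dict key
    return psser, psdf
```

Printing `psdf.dtypes` on the returned DataFrame is a quick way to check which dtype each column received.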

Updated on: Apr

Arrow-based conversion is beneficial to Python developers who work with pandas and NumPy data. However, its usage requires some minor configuration or code changes to ensure compatibility and gain the most benefit. For information on the version of PyArrow available in each Databricks Runtime version, see the Databricks Runtime release notes versions and compatibility. StructType is represented as a pandas.DataFrame instead of pandas. BinaryType is supported only for PyArrow versions 0.

Sometimes we will get data in csv, xlsx, and similar formats. For conversion, we pass the pandas DataFrame into the createDataFrame method. Example 1: Create a DataFrame and then convert it using spark. Example 2: Create a DataFrame and then convert it using spark. The dataset used here is heart. We can also convert a PySpark DataFrame to a pandas DataFrame. For this, we will use DataFrame.
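A minimal sketch of the round trip follows. The source truncates the name of the reverse method; `toPandas()` is the PySpark DataFrame method assumed here. The helper names, the sample columns, and the local session are hypothetical and stand in for whatever dataset you actually load.

```python
def pandas_to_spark(spark, pandas_df):
    """Convert a pandas DataFrame to a Spark DataFrame via createDataFrame."""
    return spark.createDataFrame(pandas_df)


def spark_to_pandas(spark_df):
    """Collect a Spark DataFrame to the driver as a pandas DataFrame."""
    # Everything is pulled into driver memory, so this suits small results only.
    return spark_df.toPandas()


def demo():
    """Round-trip a tiny, made-up DataFrame through Spark and back."""
    # Imported lazily so the helpers above can be used without these packages.
    import pandas as pd
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[*]").appName("convert-demo").getOrCreate()
    )
    pdf = pd.DataFrame({"name": ["a", "b"], "age": [30, 41]})  # hypothetical data
    sdf = pandas_to_spark(spark, pdf)
    sdf.printSchema()  # shows the Spark types inferred from the pandas dtypes
    return spark_to_pandas(sdf)
```

Running `demo()` needs PySpark installed. Keeping the conversion in small functions makes it easy to swap in a DataFrame read from csv or xlsx with pandas.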

You can skip to the next section if you already know this. pandas is one of the most popular open-source libraries in the Python programming language; it runs on a single machine and is single-threaded. Pandas is a widely used, de facto standard framework for data science, data analysis, and machine learning applications. For detailed examples refer to the pandas Tutorial. Pandas is built on top of another popular package, NumPy, which provides scientific computing in Python and supports multi-dimensional arrays.

If you are working on a Machine Learning application where you are dealing with larger datasets, Spark with Python a. Using PySpark we can run applications in parallel on a distributed cluster (multiple nodes) or even on a single node. For more details refer to PySpark Tutorial with Examples. However, if you already have prior knowledge of pandas or have been using pandas on your project and want to run bigger loads using the Apache Spark architecture, you need to rewrite your code to use the PySpark DataFrame.

To run conversion code that uses Arrow, we first need to install the pyarrow library on our machine.

Integration: Spark integrates seamlessly with other big data technologies, such as Hadoop and Kafka, making it a popular choice for big data processing tasks.

This tutorial introduces the basics of using pandas and Spark together, progressing to more complex integrations. User-Defined Functions (UDFs) can be written using pandas data manipulation capabilities and executed within the Spark context for distributed processing. A simple example is a UDF that adds one to each element in a column, applied over a Spark DataFrame originally created from a pandas DataFrame.
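The add-one UDF described above might be sketched as follows. This assumes Spark 3.x with PyArrow installed and uses the type-hinted `pandas_udf` style. The column names, the sample data, and the function names are hypothetical.

```python
def add_one(values):
    """Add one to every element; on a pandas Series this is vectorised."""
    return values + 1


def demo():
    """Apply add_one as a pandas UDF to a Spark DataFrame built from pandas."""
    # Imported lazily; requires pandas, PySpark 3.x and PyArrow.
    import pandas as pd
    from pyspark.sql import SparkSession
    from pyspark.sql.functions import pandas_udf

    spark = (
        SparkSession.builder.master("local[*]").appName("pandas-udf-demo").getOrCreate()
    )

    # The type hints tell Spark this is a Series-to-Series (scalar) pandas UDF.
    @pandas_udf("long")
    def add_one_udf(values: pd.Series) -> pd.Series:
        return add_one(values)

    sdf = spark.createDataFrame(pd.DataFrame({"x": [1, 2, 3]}))  # hypothetical data
    return sdf.withColumn("x_plus_one", add_one_udf("x")).toPandas()
```

Keeping the arithmetic in a plain function means it can be checked without a Spark session, while the decorated wrapper is what Spark actually ships to the executors.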

On Databricks, the Arrow configuration is enabled by default except for High Concurrency clusters as well as user isolation clusters in workspaces that are Unity Catalog enabled. PySpark provides a powerful distributed computing framework that can handle large-scale data processing, making it an ideal choice for big data analysis. You can inspect the Spark DataFrame using the printSchema method.
