Pivot pyspark

Pivoting is a widely used technique in data analysis, enabling you to transform data from a long format to a wide format by aggregating it based on specific criteria.

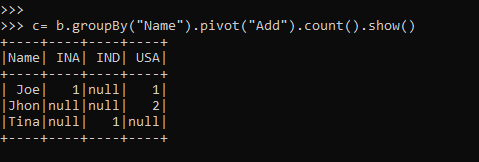

Pivot is an aggregation in which the distinct values of one grouping column are transposed into individual columns. PySpark SQL provides the `pivot()` function to rotate the data from one column into multiple columns. For example, to get the total amount of each product exported to each country, group by `Product`, pivot by `Country`, and take the sum of `Amount`. This transposes the countries from DataFrame rows into columns. Another approach is two-phase aggregation: first aggregate by both `Product` and `Country`, then pivot the smaller, pre-aggregated result.


The pivot function pivots a column of the current DataFrame and performs the specified aggregation. There are two versions of the pivot function: one that requires the caller to specify the list of distinct values to pivot on, and one that does not. The latter is more concise but less efficient, because Spark needs to first compute the list of distinct values internally.



Pivoting is a data transformation technique that converts rows into columns. It is valuable when reorganizing data for readability, aggregation, or analysis. In PySpark, `pivot` is a method of `GroupedData` objects, with the general syntax `pivot(pivot_col, values=None)`. If `values` is not specified, all unique values in the pivot column are used. To use it, first group your DataFrame with `groupBy`, then call `pivot` on the resulting `GroupedData` object, followed by an aggregation function. For example, grouping revenue records by quarter and pivoting by region displays the revenue for each quarter in separate columns for the US and EU regions. Unpivoting, which turns these columns back into rows, is the reverse operation.


Often, when viewing data, we have it stored in an observation (long) format. Sometimes we would like to turn a categorical feature into columns, and the pivot method does exactly this. In this article, we will learn how to use PySpark pivot. The quickest way to get started working with Python is to use a Docker Compose file.


After pivoting, you can sort the result with `orderBy`, for example by the grouping column in descending order. PySpark, the Python library for Apache Spark, provides a flexible set of built-in functions for pivoting DataFrames, allowing you to create pivot tables from large datasets. Unpivot is the reverse of pivot: it rotates columns back into rows.


Pivoting can be expensive on large data, especially when Spark has to compute the distinct pivot values itself. To address this, filter data before pivoting so that only pertinent values are included.
