Rider memory profiler

The goal of performance profiling is to find the cause of performance issues in an application. This includes, for example, answering a straightforward question such as "What is the slowest method?"

Regardless of which application type you are going to profile, the workflow always looks the same: decide which profiling configuration you will use, then run the profiling session and get the data. To start a session, open the run widget menu, choose Profile with, and then choose a profiling configuration. Once profiling has started, Rider opens an Analysis editor document with the profiling controller inside.

With JetBrains Rider, you can explore the managed heap while debugging and look into the memory space that is used by your application. When the debugger hits a breakpoint, you can open the memory view in a separate tab of the Debug window. After you click the grid, JetBrains Rider shows the total number of objects in the heap grouped by their full type name, together with the number of objects and the bytes they consume. The memory view also keeps track of the difference in object count between breakpoints; in our example, the number of String instances went up dramatically between two stops. This gives us an idea of the memory traffic going on in the application, which could potentially influence performance. In the selector, you can also choose Show Non-Zero Diff Only to hide all classes whose object count did not change between debugger stops. From the memory view, you can search for specific types. For example, you can find Beer instances and then double-click the desired type or press Enter to open the list of instances, where you can inspect the details of an instance or copy its value.
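The per-type difference between two debugger stops can be modeled in a few lines. The sketch below is only an illustration in Python, not how Rider inspects the .NET managed heap: it assumes a "heap" is simply a list of objects captured at each stop, and the names `count_by_type`, `diff_between_stops` and the `Beer` class are hypothetical. The `non_zero_only` flag mimics the Show Non-Zero Diff Only option.

```python
from collections import Counter
from typing import Dict, Iterable


def count_by_type(heap: Iterable[object]) -> Counter:
    """Group objects by full type name, like the memory view's grid."""
    return Counter(f"{type(o).__module__}.{type(o).__qualname__}" for o in heap)


def diff_between_stops(
    before: Counter, after: Counter, non_zero_only: bool = True
) -> Dict[str, int]:
    """Per-type change in object count between two debugger stops."""
    names = set(before) | set(after)
    # A Counter returns 0 for missing keys, so new or vanished types work too.
    diff = {name: after[name] - before[name] for name in names}
    if non_zero_only:
        # Mimics "Show Non-Zero Diff Only": hide types whose count did not change.
        diff = {name: delta for name, delta in diff.items() if delta != 0}
    return diff


class Beer:
    """Hypothetical domain type standing in for the Beer class in the text."""


# Two hypothetical "heap" states captured at consecutive breakpoints.
first_stop = [Beer(), "ale", "stout"]
second_stop = first_stop + ["lager", "porter", "bock"]

print(diff_between_stops(count_by_type(first_stop), count_by_type(second_stop)))
# {'builtins.str': 3}
```

The Beer count is unchanged between the two stops, so it is hidden by default; pass `non_zero_only=False` to see every type, including those with a zero difference.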

To analyze memory allocation between two snapshots, start a profiling session or open an existing workspace. Currently, profiling is supported only for certain run configuration types.

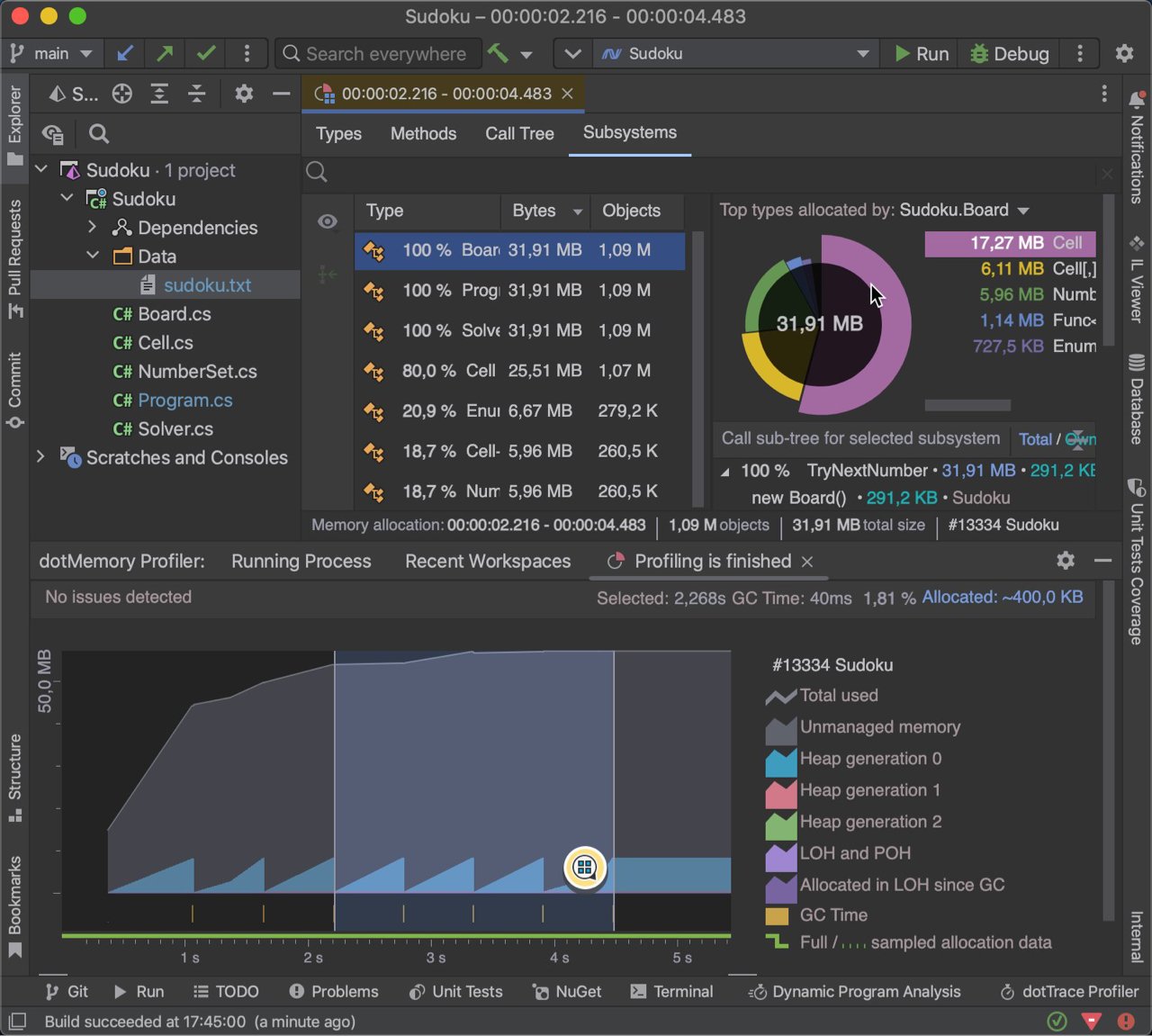

Use the Memory Allocation view to analyze allocations on a specific time interval: find out what objects were allocated on this interval and which functions allocated them. The view can show allocation data even while profiling is still in progress; memory snapshots are not required. In the Default allocation data collection mode (Windows only), dotMemory collects limited allocation data: for each function that allocates objects, you can view only approximate object sizes, and information about object count is not available. The data is not detailed because it is based on ETW events: an allocation event is triggered each time the total size of allocated memory exceeds a fixed threshold. For example, suppose a thread allocates five 50 KB memory blocks during profiling: only the allocations that push the running total past the threshold produce events, so not every block is reported individually.
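To see why threshold-based events yield only approximate sizes and no object counts, here is a simplified simulation of the five 50 KB blocks example. The 100 KB threshold and the rule that the counter resets after each event are assumptions made for illustration, and the function name `sampled_allocation_events` is hypothetical. The real runtime's bookkeeping may differ.

```python
from typing import List, Sequence


def sampled_allocation_events(
    block_sizes_kb: Sequence[int], threshold_kb: int = 100
) -> List[int]:
    """Return indices of the allocations that trigger a sampled event.

    Simplified model (assumption): a per-thread counter accumulates allocated
    memory, an event fires when the counter reaches the threshold, and the
    counter then resets to zero.
    """
    events: List[int] = []
    since_last_event = 0
    for index, size in enumerate(block_sizes_kb):
        since_last_event += size
        if since_last_event >= threshold_kb:
            events.append(index)
            since_last_event = 0
    return events


blocks = [50] * 5                      # five 50 KB blocks, as in the text
events = sampled_allocation_events(blocks)
approx_kb = len(events) * 100          # each event stands for one threshold's worth
print(events, approx_kb, sum(blocks))  # [1, 3] 200 250
```

In this model, only two of the five allocations are observed, and the estimated 200 KB differs from the actual 250 KB. The number of objects allocated between events is simply unknown.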

It seems that a common problem among profiling tools, including ours, is that they require too much effort from a developer. Profiling is currently seen as a last resort for when something has gone horribly wrong. We find this sad, because we strongly believe that regular profiling is essential for product quality. So, is there a solution? Our answer is Dynamic Program Analysis (DPA): a process that runs in the background of your IDE and checks your application for various memory allocation issues. DPA starts automatically each time you run or debug your application in Rider. In our experience, a significant number of performance issues are related to excessive memory allocation or, more precisely, to full garbage collections caused by allocations.
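The core idea of flagging excessive allocation can be illustrated with a threshold check over per-method allocation totals. This is a hypothetical simplification, not DPA's actual inspections: the record format, the method names, and the 10,000,000-byte threshold are all invented for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def flag_heavy_allocators(
    records: Iterable[Tuple[str, int]], threshold_bytes: int
) -> List[Tuple[str, int]]:
    """Sum allocated bytes per method and report methods above the threshold.

    Results are sorted so that the heaviest allocator comes first.
    """
    totals: Dict[str, int] = defaultdict(int)
    for method, size in records:
        totals[method] += size
    flagged = [(m, t) for m, t in totals.items() if t > threshold_bytes]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical allocation records collected while the app ran.
records = [
    ("OrderParser.Parse", 6_000_000),
    ("OrderParser.Parse", 5_000_000),
    ("Logger.Write", 200_000),
    ("ReportView.Render", 12_000_000),
]
print(flag_heavy_allocators(records, threshold_bytes=10_000_000))
# [('ReportView.Render', 12000000), ('OrderParser.Parse', 11000000)]
```

Aggregating per method before comparing against the threshold matters here. Neither individual `OrderParser.Parse` record exceeds the limit, but their total does.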

To open the dotMemory Profiler tool window, choose View | Tool Windows | dotMemory Profiler. This window allows you to profile and analyze memory issues in .NET applications. The dotMemory Profiler is a multi-tab window; for example, the Running Processes tab is used to attach the profiler to a running process.

To filter the call tree, start typing in the search field at the top of the window. You can use special symbols, like wildcards, in the filter; for example, a filter can select arrays that have Byte in their type or namespace, or arrays with a given number of elements (such as 10). Learn more about how to set filters. When allocation data collection is On, the profiler collects data on calls that allocate memory, as well as detailed data on the size and count of allocated objects. The collected data includes memory allocation data and memory snapshots. If the corresponding option is selected, dotMemory profiles not only the main application process but also the processes it starts. Note that the integrated profiler provides only two views for analyzing snapshots: Call Tree and Top Methods.
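Wildcard filtering of type names can be sketched with Python's standard `fnmatch` module. This uses shell-style patterns (`*`, `?`, `[...]`), which is an assumption chosen for illustration. dotMemory's own filter syntax and special symbols may differ, and the function name `filter_types` and the sample type names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def filter_types(type_names: Iterable[str], pattern: str) -> List[str]:
    """Keep type names matching a shell-style wildcard pattern (case-sensitive)."""
    return [name for name in type_names if fnmatchcase(name, pattern)]


types = [
    "System.Byte[]",
    "System.String",
    "System.Data.DataTable",
    "MyApp.ByteBuffer",
]
print(filter_types(types, "*Byte*"))         # ['System.Byte[]', 'MyApp.ByteBuffer']
print(filter_types(types, "System.Data.*"))  # ['System.Data.DataTable']
```

In `fnmatch`, square brackets in a *pattern* denote a character set. A literal array type name such as `String[,,]` would therefore need escaping first, for example with `glob.escape`.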

In Snapshot Comparison, click View memory allocation. Typically, you choose between two predefined configurations: Memory (Sampled allocations) or Memory (Full allocations). In the Full mode, dotMemory collects detailed allocation data, including the exact size of allocated objects and the object count. For example, in a console application, the subsystem of the type Program includes all objects created by the application as Program. The Detach command detaches the profiler from the application but keeps the application running. In search queries, you can use CamelHumps. Array types such as String[,,] and String[,,,] can also appear in filters.
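CamelHumps search lets an abbreviation like `SB` find `StringBuilder`. The sketch below assumes a simplified rule: each capitalized query fragment must be a prefix of a later "hump" of the type name, in order. Humps may be skipped, and matching is case-sensitive. This is an illustration, not JetBrains' matching algorithm, and the function names `humps` and `camel_humps_match` are hypothetical.

```python
import re
from typing import List

# A hump is an uppercase letter followed by lowercase letters/digits,
# or a run of lowercase letters/digits (e.g. at the start of a name).
_HUMP = re.compile(r"[A-Z][a-z0-9]*|[a-z0-9]+")


def humps(identifier: str) -> List[str]:
    """Split an identifier such as 'StringBuilder' into ['String', 'Builder']."""
    return _HUMP.findall(identifier)


def camel_humps_match(query: str, identifier: str) -> bool:
    """True if each query fragment is a prefix of a later hump, in order."""
    fragments = humps(query)
    parts = humps(identifier)
    i = 0
    for fragment in fragments:
        # Skip humps until one starts with the current fragment.
        while i < len(parts) and not parts[i].startswith(fragment):
            i += 1
        if i == len(parts):
            return False
        i += 1  # the next fragment must match a later hump
    return bool(fragments)


print(camel_humps_match("SB", "StringBuilder"))             # True
print(camel_humps_match("NRE", "NullReferenceException"))  # True
print(camel_humps_match("BS", "StringBuilder"))             # False
```

Greedily taking the earliest matching hump for each fragment is sufficient here. Each fragment matches exactly one hump, and choosing an earlier hump never blocks a later fragment.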