Spark read csv

In this tutorial, you will learn how to read a single file, multiple files, or all files from a local directory into a DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file, using PySpark examples. Spark reads CSV either through csv("path") or through format("csv") followed by load("path").
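As a rough illustration of the three read styles and the write step, here is a minimal PySpark sketch. The function names and all paths are hypothetical, and `spark` is assumed to be an existing `SparkSession`.

```python
def read_csv_examples(spark, single_path, many_paths, directory):
    """Read CSV data three ways; `spark` is assumed to be a SparkSession."""
    one = spark.read.csv(single_path)                   # a single file
    many = spark.read.csv(many_paths)                   # a list of file paths
    folder = spark.read.format("csv").load(directory)   # every file in a directory
    return one, many, folder


def write_csv(df, out_dir):
    """Write a DataFrame as CSV part files under out_dir (hypothetical path)."""
    # mode("overwrite") replaces any output that already exists at out_dir.
    df.write.mode("overwrite").option("header", True).csv(out_dir)
```

A session for trying this locally can be obtained with `SparkSession.builder.getOrCreate()` from `pyspark.sql`. Note that Spark writes a directory of part files rather than one CSV file.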

Spark SQL provides spark.read.csv("path") to read a file or a directory of CSV files into a DataFrame, and dataframe.write.csv("path") to write a DataFrame out as CSV. The option function can be used to customize the behavior of reading or writing, such as controlling the header, the delimiter character, the character set, and so on. Other generic options can be found in Generic File Source Options. For reading, the encoding option decodes the CSV files by the given encoding type.
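The header, delimiter, and character-set settings mentioned above can be passed together. The sketch below assumes a pipe-delimited UTF-8 file with a header row; the option values and the function name are illustrative only.

```python
# Illustrative values: adjust them to match the actual file.
CSV_READ_OPTIONS = {
    "header": "true",     # treat the first line as column names
    "delimiter": "|",     # field separator (assumed here to be a pipe)
    "encoding": "UTF-8",  # character set used to decode the file
}


def read_with_options(spark, path, options=None):
    """Read a CSV file with several reader options applied at once."""
    opts = CSV_READ_OPTIONS if options is None else options
    return spark.read.options(**opts).csv(path)
```

Each key could equally be set one at a time with `.option(key, value)`; `options(**opts)` just keeps the settings in one place.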

This function will go through the input once to determine the input schema if inferSchema is enabled. To avoid going through the entire data once, disable the inferSchema option or specify the schema explicitly using schema. For the extra options, refer to Data Source Option for the version you use.
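To make the trade-off concrete, the sketch below contrasts an inferred read with an explicit-schema read. The column names in `PEOPLE_SCHEMA` are hypothetical; the schema is given as a DDL string, which `DataFrameReader.schema` accepts alongside `StructType`.

```python
# Hypothetical columns, written as a DDL-formatted schema string.
PEOPLE_SCHEMA = "name STRING, city STRING, age INT"


def read_inferred(spark, path):
    """Let Spark infer column types; this costs one extra pass over the input."""
    return spark.read.option("header", True).option("inferSchema", True).csv(path)


def read_with_schema(spark, path, schema=PEOPLE_SCHEMA):
    """Supply the schema up front so no inference pass is needed."""
    return spark.read.option("header", True).schema(schema).csv(path)
```

On large inputs the explicit schema is usually the cheaper choice, since the data is not scanned just to guess types.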

You can also use a temporary view. You can configure several options for CSV file data sources. See the Apache Spark reference articles for supported read and write options. When reading CSV files with a specified schema, it is possible that the data in the files does not match the schema.
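A hedged sketch of both ideas follows: registering the CSV data as a temporary view for SQL queries, and choosing a parse mode for rows that do not match the schema. The view name and function names are hypothetical; `mode` is the standard CSV reader option whose values are `PERMISSIVE` (the default), `DROPMALFORMED`, and `FAILFAST`.

```python
def query_csv_as_view(spark, path, view_name="people_csv"):
    """Expose a CSV file to SQL through a temporary view (name is hypothetical)."""
    df = spark.read.option("header", True).csv(path)
    df.createOrReplaceTempView(view_name)
    return spark.sql(f"SELECT * FROM {view_name}")


def read_strict(spark, path, schema, mode="FAILFAST"):
    """Read with a fixed schema and an explicit policy for mismatched rows."""
    # FAILFAST raises on the first malformed row, DROPMALFORMED skips such rows,
    # and PERMISSIVE keeps them with nulls in the fields that failed to parse.
    return spark.read.schema(schema).option("mode", mode).csv(path)
```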

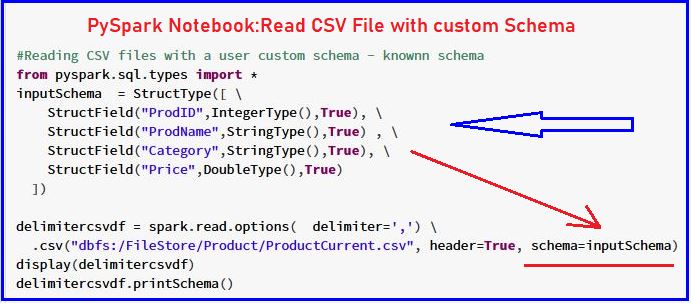

Spark provides several read options that let you customize how data is read from a source. This article discusses the commonly used read options and their configuration; many other options are available depending on the input data source. Two typical configurations are reading a CSV file into 10 partitions and reading a CSV file with a custom schema for the data.
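The original example for the 10-partition configuration did not survive in this page, so the following is only one way to get that result. As far as I know the CSV reader has no partition-count option of its own, so this sketch swaps in `DataFrame.repartition` after the read; the function name is hypothetical.

```python
def read_into_partitions(spark, path, num_partitions=10):
    """Read a CSV file, then redistribute the rows into num_partitions partitions."""
    df = spark.read.option("header", True).csv(path)
    # repartition() triggers a shuffle; it is applied after the read because
    # this is an assumed substitute for the article's missing example.
    return df.repartition(num_partitions)
```

The resulting partition count can be checked with `df.rdd.getNumPartitions()`.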

A value that does not fit its column's declared type cannot be parsed: for example, a field containing the name of a city will not parse as an integer. If enforceSchema is set to true, the specified or inferred schema will be forcibly applied to datasource files, and headers in CSV files will be ignored. The nullValue option sets the string representation of a null value. Note that if the given path is an RDD of strings, the header option removes every line identical to the header, if one exists.
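The options described above might be combined as in the sketch below. It assumes the passage refers to Spark's `enforceSchema` and `nullValue` CSV options; the `"NA"` marker and the function name are hypothetical.

```python
def read_with_null_marker(spark, path, schema, null_marker="NA"):
    """Apply a schema forcibly and map a marker string to null."""
    return (
        spark.read
        .schema(schema)
        .option("header", True)             # first line holds column names
        .option("enforceSchema", True)      # apply the schema; header names are not checked
        .option("nullValue", null_marker)   # cells equal to the marker become null
        .csv(path)
    )
```

Setting `enforceSchema` to `False` instead makes Spark validate the header names against the schema, which can catch reordered columns.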

Note that, unless a schema is inferred or supplied, Spark still reads all columns as strings (StringType) by default. Spark also parses only the columns a query requires (column pruning). Therefore, corrupt records can differ depending on the required set of fields.
