VSeeFace

BlendShapeHelper is a VSeeFace Unity script that helps automatically create blendshapes for material toggles.

I am having a major issue and am at my wits' end. I have uninstalled old versions of VSeeFace and my Leap Motion software. I have installed the new Leap Motion Gemini software. I have verified through the visualizer that the software is detecting my hardware. I have downloaded the latest VSeeFace software 1. When launched, I have loaded my avatar, and my face controls work perfectly. The drivers for my PC and both programs are up to date.


VSeeFace is a free, highly configurable face and hand tracking VRM and VSFAvatar avatar puppeteering program for virtual YouTubers, with a focus on robust tracking and high image quality. VSeeFace offers functionality similar to Luppet, 3tene, Wakaru and similar programs. VSeeFace runs only on 64-bit Windows 8 and above. Face tracking, including eye gaze, blink, eyebrow and mouth tracking, is done through a regular webcam. For the optional hand tracking, a Leap Motion device is required. You can see a comparison of the face tracking performance compared to other popular vtuber applications here. If you have any questions or suggestions, please first check the FAQ. Please note that Live2D models are not supported. To update VSeeFace, just delete the old folder or overwrite it when unpacking the new version. For details, please see here. Download v1. Just make sure to uninstall any older versions of the Leap Motion software first. VSeeFace v1.

Please note that using partially transparent background images with a capture program that does not support RGBA webcams can lead to color errors.
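As a rough illustration of how such color errors can arise, the sketch below compares correctly blending a partially transparent pixel over a background with simply discarding its alpha channel. This is an assumed, simplified model of an RGB-only capture path and not a description of how VSeeFace or any particular capture program works; the function names `composite_over` and `drop_alpha` are hypothetical.

```python
# Hypothetical sketch: what can go wrong when an RGBA frame is read
# by a consumer that ignores the alpha channel. Not VSeeFace code.

def composite_over(pixel_rgba, background_rgb):
    """Blend a straight-alpha RGBA pixel over an opaque RGB background."""
    r, g, b, a = pixel_rgba
    # Integer "over" blend with rounding, per 8-bit channel.
    return tuple(
        (c * a + bg * (255 - a) + 127) // 255
        for c, bg in zip((r, g, b), background_rgb)
    )

def drop_alpha(pixel_rgba):
    """Assumed behavior of an RGB-only consumer: alpha is discarded."""
    return pixel_rgba[:3]

# A half-transparent red pixel shown over a blue background.
pixel = (255, 0, 0, 128)
background = (0, 0, 255)

correct = composite_over(pixel, background)  # red mixed with blue
naive = drop_alpha(pixel)                    # fully saturated red

print("blended:", correct)
print("alpha dropped:", naive)
```

Under this model, fully opaque and fully transparent pixels are unaffected in practice (the latter are replaced by the background), which is consistent with the errors being tied to partially transparent images.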



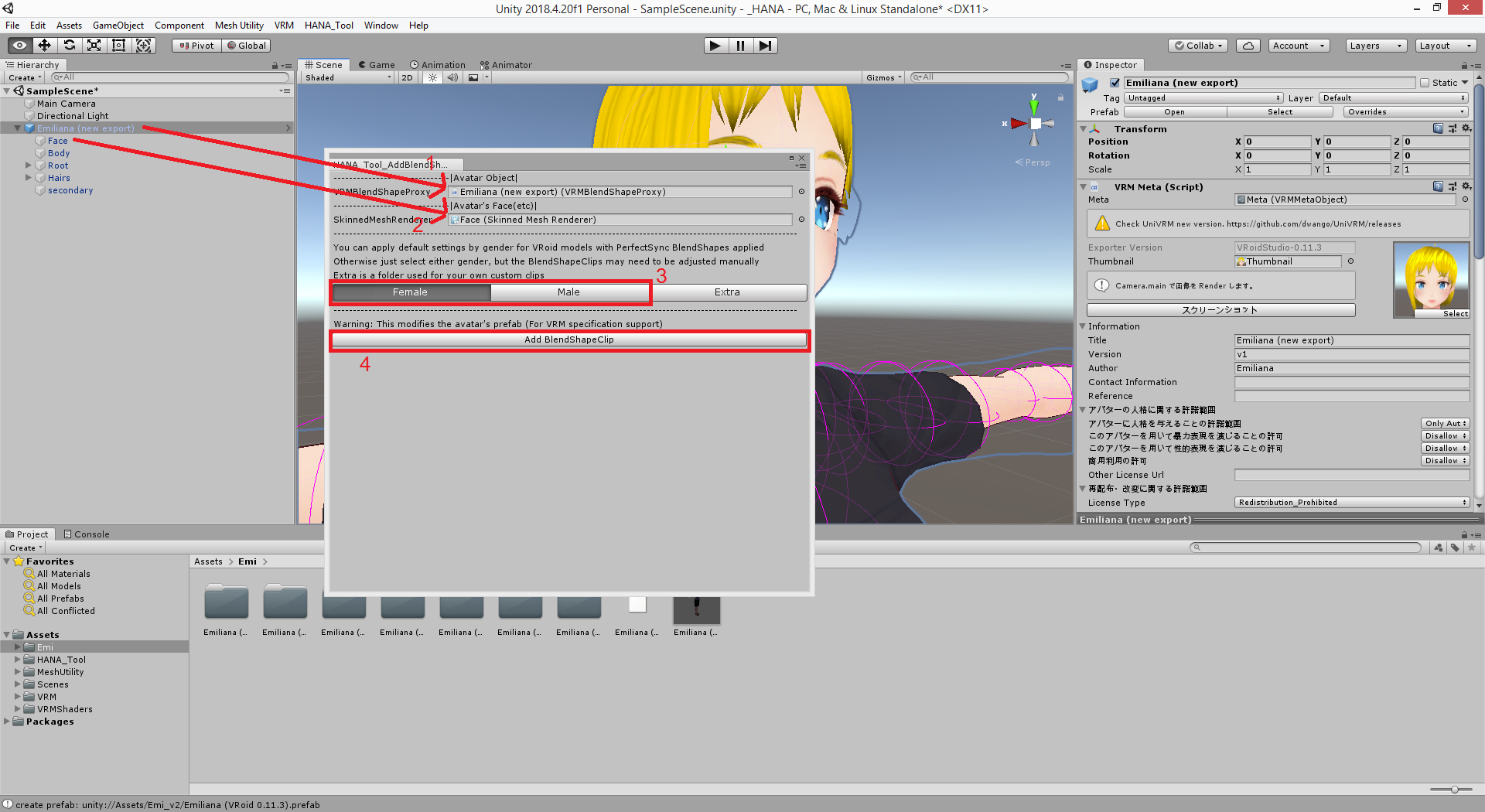


The avatar should now move based on the received data and the settings below. You can do this by dragging in the. As for data stored on the local PC, there are a few log files to help with debugging, which are overwritten after restarting VSeeFace twice, and the configuration files. Generally, since the issue is triggered by certain virtual camera drivers, uninstalling all virtual cameras should be effective as well. That should prevent this issue. Otherwise, this is usually caused by laptops where OBS runs on the integrated graphics chip, while VSeeFace runs on a separate discrete one. This is most likely caused by not properly normalizing the model during the first VRM conversion. VSeeFace never deletes itself. Having an expression detection setup loaded can increase the startup time of VSeeFace even if expression detection is disabled or set to simple mode. If you are trying to figure out an issue where your avatar begins moving strangely when you leave the view of the camera, now would be a good time to move out of the view and check what happens to the tracking points. This error occurs with certain versions of UniVRM. Mods are not allowed to modify the display of any credits information or version information. Spout2 through a plugin.


In another case, setting VSeeFace to realtime priority seems to have helped. However, the fact that a camera is able to do 60 fps might still be a plus with respect to its general quality level. You can configure it in Unity instead, as described in this video. I took a lot of care to minimize possible privacy issues. When installing a different version of UniVRM, make sure to first completely remove all folders of the version already in the project. To trigger the Fun expression, smile by moving the corners of your mouth upwards. Another workaround is to use the virtual camera with a fully transparent background image and an ARGB video capture source, as described above. This thread on the Unity forums might contain helpful information. If you would like to see the camera image while your avatar is being animated, you can start VSeeFace while run. For more information, please refer to this. When the Calibrate button is pressed, most of the recorded data is used to train a detection system. VSeeFace is beta software.
