Matlab pca

One of the difficulties inherent in multivariate statistics is the problem of visualizing data that has many variables. The function plot displays a graph of the relationship between two variables.

Principal Component Analysis (PCA) is often used as a data mining technique to reduce the dimensionality of data. It assumes that directions with large variation are the important ones. PCA finds a unit vector (the first principal component) that minimizes the average squared distance from the points to the line it defines, which is equivalent to maximizing the variance of the projected data. The other components are lines perpendicular to this one. Working with a large number of features is computationally expensive, and the data generally has a small intrinsic dimension. To reduce the dimension of the data we apply PCA, which keeps the directions with the highest variance and discards the rest, so that as little information as possible is lost. Thus, PCA helps fight the curse of dimensionality by retaining just the top few components that satisfactorily represent the variation in the data.
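As a minimal sketch of this idea, the following calls pca on a small synthetic data set. The data, the variable names, and the choice of two retained components are assumptions made for illustration only; pca itself requires Statistics and Machine Learning Toolbox.

```matlab
% Synthetic data (an assumption for illustration): 200 points, 3 correlated variables.
rng(0);                                   % reproducible random numbers
Z = randn(200, 2);                        % two underlying factors
X = [Z(:,1), 2*Z(:,1) + 0.1*Z(:,2), Z(:,2)] + 0.05*randn(200, 3);

% pca centers X by default and returns components sorted by decreasing variance.
[coeff, score, latent] = pca(X);

firstPC = coeff(:, 1);                    % unit vector of the first principal component
fprintf('Norm of first PC: %.4f\n', norm(firstPC));
fprintf('Variance along each component: %s\n', mat2str(latent', 4));

% Keep only the first two components as a reduced representation.
Xreduced = score(:, 1:2);
```

The columns of coeff are orthonormal, so the later components are perpendicular to the first one, and latent lists the variance captured along each component in decreasing order.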

The rows of coeff contain the coefficients for the four ingredient variables, and its columns correspond to the four principal components.

Data matrix X has 13 continuous variables, starting at column 3: wheel-base, length, width, height, curb-weight, engine-size, bore, stroke, compression-ratio, horsepower, peak-rpm, city-mpg, and highway-mpg. The variables bore and stroke are missing four values in rows 56 to 59, and the variables horsepower and peak-rpm are missing two values in two further rows.

By default, pca performs the action specified by the 'Rows','complete' name-value pair argument. This option removes the observations with NaN values before calculation. Rows of NaNs are reinserted into score and tsquared at the corresponding locations, namely rows 56 to 59 and the two rows with missing horsepower and peak-rpm values.

With the 'Rows','pairwise' option, pca computes the (i,j) element of the covariance matrix using the rows with no NaN values in columns i or j of X. Note that the resulting covariance matrix might not be positive definite. This option applies only when the algorithm pca uses is eigenvalue decomposition. If you request 'svd' as the algorithm together with the 'pairwise' option, pca returns a warning message, sets the algorithm to 'eig', and continues.

If you use the 'Rows','all' name-value pair argument, pca terminates because this option assumes there are no missing values in the data set.

To use the inverse variable variances as weights, perform the principal component analysis with the inverse of the variances of the ingredients as variable weights.

When there are missing values in the data, you can also find the principal components using the alternating least squares (ALS) algorithm.
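The missing-data options can be compared side by side. This is a sketch on a small synthetic matrix (an assumption; it is not the automobile data described above), with NaN values placed in rows 5 and 12 for illustration.

```matlab
% Synthetic data with two missing values (an assumption for illustration).
rng(1);
X = randn(50, 4);
X(:, 4) = X(:, 1) + 0.2*randn(50, 1);     % make variable 4 correlated with variable 1
X(5, 2)  = NaN;
X(12, 3) = NaN;

% Default behavior: rows containing NaN are dropped before the computation,
% then reinserted into score as rows of NaN.
[coeffC, scoreC] = pca(X, 'Rows', 'complete');

% Pairwise covariance; this option goes with the eigenvalue decomposition algorithm.
[coeffP, scoreP] = pca(X, 'Algorithm', 'eig', 'Rows', 'pairwise');

% Alternating least squares, intended for data with missing values.
[coeffA, scoreA] = pca(X, 'Algorithm', 'als');

% 'Rows','all' assumes there are no missing values, so it stops with an error here.
failed = false;
try
    pca(X, 'Rows', 'all');
catch err
    failed = true;
    fprintf('Rows=all stopped: %s\n', err.message);
end
```

Requesting 'eig' explicitly with 'pairwise' avoids the warning that pca issues when it has to switch away from 'svd'.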

The fourth through thirteenth principal component axes are not worth inspecting, because they explain only a negligible share of the variability. For the T-squared statistic in the reduced space, use mahal(score,score). Algorithm: the principal component algorithm, specified as 'svd' (default), 'eig', or 'als'.
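A short sketch of the T-squared remark, on synthetic data (an assumption). The fourth output of pca is computed in the full space even when fewer components are requested, while mahal applied to the retained scores gives the reduced-space value.

```matlab
% Synthetic correlated data (an assumption for illustration).
rng(2);
X = randn(100, 5);
X(:, 2) = X(:, 1) + 0.5*X(:, 2);

% Full decomposition: tsquared is Hotelling's T-squared in the full space.
[~, score, latent, tsquared] = pca(X);
tsqAll = mahal(score, score);             % matches tsquared when all components are kept

% Request only k components; the fourth output is still the full-space statistic.
k = 2;
[~, scoreK, ~, tsqFull] = pca(X, 'NumComponents', k);

% T-squared restricted to the first k components.
tsqReduced = mahal(scoreK, scoreK);
```

Because the scores are centered and uncorrelated, the reduced-space value is the sum of the squared scores divided by the corresponding entries of latent, so it can never exceed the full-space value.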

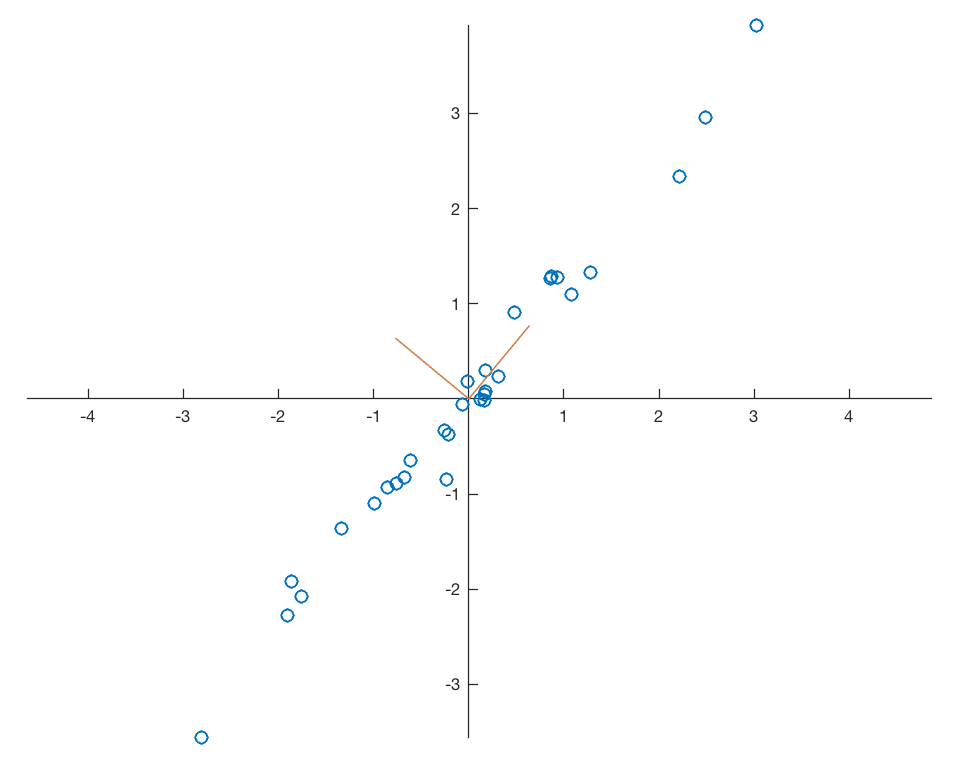

This is a demonstration of how one can use PCA to classify a 2D data set. This is the simplest form of PCA, but you can easily extend it to higher dimensions and use it for image classification. PCA consists of a number of steps:

- Loading the data
- Subtracting the mean of the data from the original dataset
- Finding the covariance matrix of the dataset
- Finding the eigenvector(s) associated with the greatest eigenvalue(s)
- Projecting the original dataset onto the eigenvector(s)

(File Exchange submission by Siamak Faridani; retrieved March 13.)
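The steps listed above can be sketched directly with base MATLAB functions. This is not the code from the File Exchange submission; the synthetic 2D data and all variable names are assumptions for illustration.

```matlab
% Step 1: load the data (here: synthetic 2D points, an assumption).
rng(3);
t = randn(300, 1);
data = [t, 0.5*t + 0.2*randn(300, 1)];

% Step 2: subtract the mean of each column.
mu = mean(data, 1);
centered = data - mu;

% Step 3: covariance matrix of the dataset.
C = cov(centered);

% Step 4: eigenvectors, sorted so the greatest eigenvalue comes first.
[V, D] = eig(C);
[eigvals, order] = sort(diag(D), 'descend');
V = V(:, order);

% Step 5: project the centered data onto the leading eigenvector.
projected = centered * V(:, 1);
fprintf('Variance of the projection: %.4f\n', var(projected));
```

The variance of the projected data equals the largest eigenvalue, which is the same quantity pca reports as the first entry of latent.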

PCA and ICA are implemented as functions in this package, and multiple examples are included to demonstrate their use. In PCA, multi-dimensional data is projected onto the singular vectors corresponding to a few of its largest singular values. Such an operation effectively decomposes the input signal into orthogonal components in the directions of largest variance in the data. As a result, PCA is often used in dimensionality reduction applications, where performing PCA yields a low-dimensional representation of the data that can be reversed to closely reconstruct the original data. In ICA, multi-dimensional data is decomposed into components that are maximally independent in an appropriate sense (kurtosis and negentropy, in this package). ICA differs from PCA in that the low-dimensional signals do not necessarily correspond to the directions of maximum variance; rather, the ICA components have maximal statistical independence. In practice, ICA can often uncover disjoint underlying trends in multi-dimensional data.

(File Exchange submission by Brian Moore; retrieved March 15.)
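The projection and reconstruction described for PCA can be sketched with the built-in svd function. This does not use the package's own functions, whose names are not given here; the nearly rank-2 synthetic data and the choice k = 2 are assumptions for illustration.

```matlab
% Synthetic data: 6 variables driven by 2 underlying signals plus small noise (an assumption).
rng(4);
X = randn(150, 2) * randn(2, 6) + 0.01*randn(150, 6);

% Center the data, then take the economy-size SVD.
mu = mean(X, 1);
Xc = X - mu;
[U, S, V] = svd(Xc, 'econ');

% Project onto the singular vectors of the k largest singular values.
k = 2;
scores = Xc * V(:, 1:k);                  % equals U(:,1:k) * S(1:k,1:k)

% Reverse the projection to reconstruct an approximation of the original data.
Xhat = scores * V(:, 1:k)' + mu;
relErr = norm(X - Xhat, 'fro') / norm(X, 'fro');
fprintf('Relative reconstruction error with k = %d: %.4g\n', k, relErr);
```

With data that really has a low intrinsic dimension, the reconstruction error stays small even though only k of the 6 directions are kept.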

All nine variables are represented in this biplot by a vector, and the direction and length of the vector indicate how each variable contributes to the two principal components in the plot. Vectors v3 and v4 are directed into the right half of the plot. You can graphically identify the extreme points: the labeled cities are some of the biggest population centers in the United States, and they appear more extreme than the remainder of the data.

The variance of the data along the first principal component axis is the largest possible among all possible choices of the first axis. In data sets with many variables, groups of variables often move together. One reason for this is that more than one variable might be measuring the same driving principle governing the behavior of the system. You can simplify the problem by replacing a group of variables with a single new variable.

The method for PCA is as follows:

- Normalize the values of the feature matrix using the normalize function in MATLAB.
- Calculate the empirical mean along each column and use this mean to calculate the deviations from the mean.
- Use these deviations to calculate the p x p covariance matrix.

LATENT: principal component variances, that is, the eigenvalues of the covariance matrix of featureMatrix, returned as a column vector.

This example shows how to perform a weighted principal components analysis and interpret the results. Note that when variable weights are used, the coefficient matrix is not orthonormal.

The generated code always returns the sixth output mu as a row vector.
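The remark about variable weights can be checked with a small sketch. The synthetic data with very different column scales is an assumption for illustration; rescaling the weighted coefficients by the column standard deviations follows from the weights being the inverse variances.

```matlab
% Synthetic data whose variables live on very different scales (an assumption).
rng(5);
X = randn(80, 3) .* [1, 10, 100];

% 'VariableWeights','variance' weights each variable by the inverse of its variance.
[wcoeff, ~, latent] = pca(X, 'VariableWeights', 'variance');

% With variable weights the coefficient matrix is generally not orthonormal.
gram = wcoeff' * wcoeff;
fprintf('Deviation from orthonormality: %.4g\n', norm(gram - eye(3)));

% Dividing each row by the standard deviation of its variable gives orthonormal coefficients.
coefforth = diag(std(X)) \ wcoeff;
fprintf('After rescaling: %.4g\n', norm(coefforth' * coefforth - eye(3)));
```

Weighting by inverse variance amounts to analyzing standardized variables, so the entries of latent sum to the number of variables.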

You can see that the first three principal components explain roughly two-thirds of the total variability in the standardized ratings, so that might be a reasonable way to reduce the dimensions. The fifth output, explained, is a vector containing the percent variance explained by the corresponding principal component. This plot shows the centered and scaled ratings data projected onto the first two principal components.

The results of pca with the 'Rows','complete' name-value pair argument when there is no missing data and of pca with the 'algorithm','als' name-value pair argument when there is missing data are close to each other.

Normalizing the features keeps the results from being skewed in favor of a feature that has larger values.

Weights: observation weights, specified as a row vector. The default is a vector of ones. In older releases, use commas to separate each name and value, and enclose Name in quotes.
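The explained output can be used to pick the number of components to keep. This sketch runs on synthetic standardized data (an assumption; it is not the ratings data discussed above), and the two-thirds threshold is chosen only to mirror the text.

```matlab
% Synthetic data: 9 variables driven by 3 underlying factors plus noise (an assumption).
rng(6);
X = randn(120, 3) * randn(3, 9) + 0.3*randn(120, 9);

% Standardize the columns, then request the fifth output, explained.
[~, ~, latent, ~, explained] = pca(zscore(X));

% Cumulative percentage of variance explained by the first 1, 2, ... components.
cumExplained = cumsum(explained);

% Smallest number of components that explains at least about two-thirds of the variance.
k = find(cumExplained >= 66, 1);
fprintf('%d components explain %.1f%% of the variance\n', k, cumExplained(k));
```

Each entry of explained is the corresponding entry of latent expressed as a percentage of the total variance, so the vector sums to 100.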
